Compare commits

1 Commits

| Author | SHA1 | Date |
|---|---|---|
| | c6b8a2c2fc | |

16

.vscode/c_cpp_properties.json

vendored

@ -1,16 +0,0 @@

|

|||||||

{

|

|

||||||

"configurations": [

|

|

||||||

{

|

|

||||||

"name": "Linux",

|

|

||||||

"includePath": [

|

|

||||||

"${workspaceFolder}/**"

|

|

||||||

],

|

|

||||||

"defines": [],

|

|

||||||

"compilerPath": "/usr/bin/gcc",

|

|

||||||

"cStandard": "c11",

|

|

||||||

"cppStandard": "c++14",

|

|

||||||

"intelliSenseMode": "clang-x64"

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"version": 4

|

|

||||||

}

|

|

||||||

BIN

.vscode/ipch/778a17e566a4909e/mmap_address.bin

vendored

Binary file not shown.

BIN

.vscode/ipch/ccf983af1f87ec2b/mmap_address.bin

vendored

Binary file not shown.

BIN

.vscode/ipch/e40aedd19a224f8d/mmap_address.bin

vendored

Binary file not shown.

6

.vscode/settings.json

vendored

@ -1,6 +0,0 @@

|

|||||||

{

|

|

||||||

"files.associations": {

|

|

||||||

"core": "cpp",

|

|

||||||

"sparsecore": "cpp"

|

|

||||||

}

|

|

||||||

}

|

|

||||||

@ -24,7 +24,6 @@ find_package(catkin REQUIRED COMPONENTS

|

|||||||

pcl_conversions

|

pcl_conversions

|

||||||

pcl_ros

|

pcl_ros

|

||||||

std_srvs

|

std_srvs

|

||||||

visualization_msgs

|

|

||||||

)

|

)

|

||||||

|

|

||||||

## System dependencies are found with CMake's conventions

|

## System dependencies are found with CMake's conventions

|

||||||

@ -80,7 +79,6 @@ include_directories(

|

|||||||

add_library(msckf_vio

|

add_library(msckf_vio

|

||||||

src/msckf_vio.cpp

|

src/msckf_vio.cpp

|

||||||

src/utils.cpp

|

src/utils.cpp

|

||||||

src/image_handler.cpp

|

|

||||||

)

|

)

|

||||||

add_dependencies(msckf_vio

|

add_dependencies(msckf_vio

|

||||||

${${PROJECT_NAME}_EXPORTED_TARGETS}

|

${${PROJECT_NAME}_EXPORTED_TARGETS}

|

||||||

@ -89,7 +87,6 @@ add_dependencies(msckf_vio

|

|||||||

target_link_libraries(msckf_vio

|

target_link_libraries(msckf_vio

|

||||||

${catkin_LIBRARIES}

|

${catkin_LIBRARIES}

|

||||||

${SUITESPARSE_LIBRARIES}

|

${SUITESPARSE_LIBRARIES}

|

||||||

${OpenCV_LIBRARIES}

|

|

||||||

)

|

)

|

||||||

|

|

||||||

# Msckf Vio nodelet

|

# Msckf Vio nodelet

|

||||||

@ -109,7 +106,6 @@ target_link_libraries(msckf_vio_nodelet

|

|||||||

add_library(image_processor

|

add_library(image_processor

|

||||||

src/image_processor.cpp

|

src/image_processor.cpp

|

||||||

src/utils.cpp

|

src/utils.cpp

|

||||||

src/image_handler.cpp

|

|

||||||

)

|

)

|

||||||

add_dependencies(image_processor

|

add_dependencies(image_processor

|

||||||

${${PROJECT_NAME}_EXPORTED_TARGETS}

|

${${PROJECT_NAME}_EXPORTED_TARGETS}

|

||||||

|

|||||||

30

README.md

@ -1,22 +1,12 @@

|

|||||||

# MSCKF\_VIO

|

# MSCKF\_VIO

|

||||||

|

|

||||||

|

|

||||||

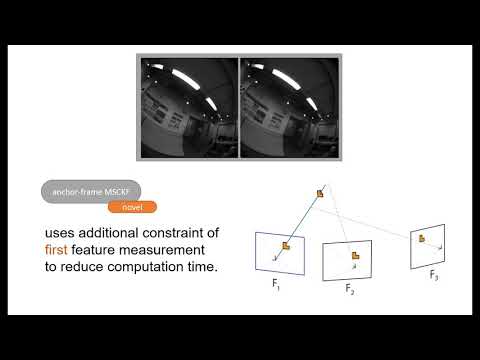

The `MSCKF_VIO` package is a stereo-photometric version of MSCKF. The software takes in synchronized stereo images and IMU messages and generates real-time 6DOF pose estimation of the IMU frame.

|

The `MSCKF_VIO` package is a stereo version of MSCKF. The software takes in synchronized stereo images and IMU messages and generates real-time 6DOF pose estimation of the IMU frame.

|

||||||

|

|

||||||

This approach is based on the paper written by Ke Sun et al.

|

The software is tested on Ubuntu 16.04 with ROS Kinetic.

|

||||||

[https://arxiv.org/abs/1712.00036](https://arxiv.org/abs/1712.00036) and their Stereo MSCKF implementation, which tightly fuse the matched feature information of a stereo image pair into a 6DOF Pose.

|

|

||||||

The approach implemented in this repository follows the semi-dense msckf approach tightly fusing the photometric

|

|

||||||

information around the matched features into the covariance matrix, as described and derived in the master thesis [Pose Estimation using a Stereo-Photometric Multi-State Constraint Kalman Filter](http://raphael.maenle.net/resources/sp-msckf/maenle_master_thesis.pdf).

|

|

||||||

|

|

||||||

Its positioning is comparable to the approach from Ke Sun et al. with the photometric approach, with a higher

|

|

||||||

computational load, especially with larger image patches around the feature. A video explaining the approach can be

|

|

||||||

found on [https://youtu.be/HrqQywAnenQ](https://youtu.be/HrqQywAnenQ):

|

|

||||||

<br/>

|

|

||||||

[](https://www.youtube.com/watch?v=HrqQywAnenQ)

|

|

||||||

|

|

||||||

<br/>

|

|

||||||

This software should be deployed using ROS Kinetic on Ubuntu 16.04 or 18.04.

|

|

||||||

|

|

||||||

|

Video: [https://www.youtube.com/watch?v=jxfJFgzmNSw&t](https://www.youtube.com/watch?v=jxfJFgzmNSw&t=3s)<br/>

|

||||||

|

Paper Draft: [https://arxiv.org/abs/1712.00036](https://arxiv.org/abs/1712.00036)

|

||||||

|

|

||||||

## License

|

## License

|

||||||

|

|

||||||

@ -38,6 +28,16 @@ cd your_work_space

|

|||||||

catkin_make --pkg msckf_vio --cmake-args -DCMAKE_BUILD_TYPE=Release

|

catkin_make --pkg msckf_vio --cmake-args -DCMAKE_BUILD_TYPE=Release

|

||||||

```

|

```

|

||||||

|

|

||||||

|

## Calibration

|

||||||

|

|

||||||

|

An accurate calibration is crucial for successfully running the software. To get the best performance of the software, the stereo cameras and IMU should be hardware synchronized. Note that for the stereo calibration, which includes the camera intrinsics, distortion, and extrinsics between the two cameras, you have to use a calibration software. **Manually setting these parameters will not be accurate enough.** [Kalibr](https://github.com/ethz-asl/kalibr) can be used for the stereo calibration and also to get the transformation between the stereo cameras and IMU. The yaml file generated by Kalibr can be directly used in this software. See calibration files in the `config` folder for details. The two calibration files in the `config` folder should work directly with the EuRoC and [fast flight](https://github.com/KumarRobotics/msckf_vio/wiki) datasets. The convention of the calibration file is as follows:

|

||||||

|

|

||||||

|

`camx/T_cam_imu`: takes a vector from the IMU frame to the camx frame.

|

||||||

|

`cam1/T_cn_cnm1`: takes a vector from the cam0 frame to the cam1 frame.

|

||||||

|

|

||||||

|

The filter uses the first 200 IMU messages to initialize the gyro bias, acc bias, and initial orientation. Therefore, the robot is required to start from a stationary state in order to initialize the VIO successfully.

|

||||||

|

|

||||||

|

|

||||||

## EuRoC and UPenn Fast flight dataset example usage

|

## EuRoC and UPenn Fast flight dataset example usage

|

||||||

|

|

||||||

First obtain either the [EuRoC](https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets) or the [UPenn fast flight](https://github.com/KumarRobotics/msckf_vio/wiki/Dataset) dataset.

|

First obtain either the [EuRoC](https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets) or the [UPenn fast flight](https://github.com/KumarRobotics/msckf_vio/wiki/Dataset) dataset.

|

||||||

@ -75,8 +75,6 @@ To visualize the pose and feature estimates you can use the provided rviz config

|

|||||||

|

|

||||||

## ROS Nodes

|

## ROS Nodes

|

||||||

|

|

||||||

The general structure is similar to the structure of the MSCKF implementation this repository is derived from.

|

|

||||||

|

|

||||||

### `image_processor` node

|

### `image_processor` node

|

||||||

|

|

||||||

**Subscribed Topics**

|

**Subscribed Topics**

|

||||||

|

|||||||

@ -9,7 +9,7 @@ cam0:

|

|||||||

0, 0, 0, 1.000000000000000]

|

0, 0, 0, 1.000000000000000]

|

||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [-0.28340811, 0.07395907, 0.00019359, 1.76187114e-05]

|

distortion_coeffs: [-0.28340811, 0.07395907, 0.00019359, 1.76187114e-05]

|

||||||

distortion_model: pre-radtan

|

distortion_model: radtan

|

||||||

intrinsics: [458.654, 457.296, 367.215, 248.375]

|

intrinsics: [458.654, 457.296, 367.215, 248.375]

|

||||||

resolution: [752, 480]

|

resolution: [752, 480]

|

||||||

timeshift_cam_imu: 0.0

|

timeshift_cam_imu: 0.0

|

||||||

@ -26,7 +26,7 @@ cam1:

|

|||||||

0, 0, 0, 1.000000000000000]

|

0, 0, 0, 1.000000000000000]

|

||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [-0.28368365, 0.07451284, -0.00010473, -3.55590700e-05]

|

distortion_coeffs: [-0.28368365, 0.07451284, -0.00010473, -3.55590700e-05]

|

||||||

distortion_model: pre-radtan

|

distortion_model: radtan

|

||||||

intrinsics: [457.587, 456.134, 379.999, 255.238]

|

intrinsics: [457.587, 456.134, 379.999, 255.238]

|

||||||

resolution: [752, 480]

|

resolution: [752, 480]

|

||||||

timeshift_cam_imu: 0.0

|

timeshift_cam_imu: 0.0

|

||||||

|

|||||||

@ -1,36 +0,0 @@

|

|||||||

cam0:

|

|

||||||

T_cam_imu:

|

|

||||||

[-0.9995378259923383, 0.02917807204183088, -0.008530798463872679, 0.047094247958417004,

|

|

||||||

0.007526588843243184, -0.03435493139706542, -0.9993813532126198, -0.04788273017221637,

|

|

||||||

-0.029453096117288798, -0.9989836729399656, 0.034119442089241274, -0.0697294754693238,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

camera_model: pinhole

|

|

||||||

distortion_coeffs: [0.0034823894022493434, 0.0007150348452162257, -0.0020532361418706202,

|

|

||||||

0.00020293673591811182]

|

|

||||||

distortion_model: pre-equidistant

|

|

||||||

intrinsics: [190.97847715128717, 190.9733070521226, 254.93170605935475, 256.8974428996504]

|

|

||||||

resolution: [512, 512]

|

|

||||||

rostopic: /cam0/image_raw

|

|

||||||

cam1:

|

|

||||||

T_cam_imu:

|

|

||||||

[-0.9995240747493029, 0.02986739485347808, -0.007717688852024281, -0.05374086123613335,

|

|

||||||

0.008095979457928231, 0.01256553460985914, -0.9998882749870535, -0.04648588412432889,

|

|

||||||

-0.02976708103202316, -0.9994748851595197, -0.0128013601698453, -0.07333210787623645,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

T_cn_cnm1:

|

|

||||||

[0.9999994317488622, -0.0008361847221513937, -0.0006612844045898121, -0.10092123225528335,

|

|

||||||

0.0008042457277382264, 0.9988989443471681, -0.04690684567228134, -0.001964540595211977,

|

|

||||||

0.0006997790813734836, 0.04690628718225568, 0.9988990492196964, -0.0014663556043866572,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

camera_model: pinhole

|

|

||||||

distortion_coeffs: [0.0034003170790442797, 0.001766278153469831, -0.00266312569781606,

|

|

||||||

0.0003299517423931039]

|

|

||||||

distortion_model: pre-equidistant

|

|

||||||

intrinsics: [190.44236969414825, 190.4344384721956, 252.59949716835982, 254.91723064636983]

|

|

||||||

resolution: [512, 512]

|

|

||||||

rostopic: /cam1/image_raw

|

|

||||||

T_imu_body:

|

|

||||||

[1.0000, 0.0000, 0.0000, 0.0000,

|

|

||||||

0.0000, 1.0000, 0.0000, 0.0000,

|

|

||||||

0.0000, 0.0000, 1.0000, 0.0000,

|

|

||||||

0.0000, 0.0000, 0.0000, 1.0000]

|

|

||||||

@ -1,36 +0,0 @@

|

|||||||

cam0:

|

|

||||||

T_cam_imu:

|

|

||||||

[-0.9995378259923383, 0.02917807204183088, -0.008530798463872679, 0.047094247958417004,

|

|

||||||

0.007526588843243184, -0.03435493139706542, -0.9993813532126198, -0.04788273017221637,

|

|

||||||

-0.029453096117288798, -0.9989836729399656, 0.034119442089241274, -0.0697294754693238,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

camera_model: pinhole

|

|

||||||

distortion_coeffs: [0.010171079892421483, -0.010816440029919381, 0.005942781769412756,

|

|

||||||

-0.001662284667857643]

|

|

||||||

distortion_model: equidistant

|

|

||||||

intrinsics: [380.81042871360756, 380.81194179427075, 510.29465304840727, 514.3304630538506]

|

|

||||||

resolution: [1024, 1024]

|

|

||||||

rostopic: /cam0/image_raw

|

|

||||||

cam1:

|

|

||||||

T_cam_imu:

|

|

||||||

[-0.9995240747493029, 0.02986739485347808, -0.007717688852024281, -0.05374086123613335,

|

|

||||||

0.008095979457928231, 0.01256553460985914, -0.9998882749870535, -0.04648588412432889,

|

|

||||||

-0.02976708103202316, -0.9994748851595197, -0.0128013601698453, -0.07333210787623645,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

T_cn_cnm1:

|

|

||||||

[0.9999994317488622, -0.0008361847221513937, -0.0006612844045898121, -0.10092123225528335,

|

|

||||||

0.0008042457277382264, 0.9988989443471681, -0.04690684567228134, -0.001964540595211977,

|

|

||||||

0.0006997790813734836, 0.04690628718225568, 0.9988990492196964, -0.0014663556043866572,

|

|

||||||

0.0, 0.0, 0.0, 1.0]

|

|

||||||

camera_model: pinhole

|

|

||||||

distortion_coeffs: [0.01371679169245271, -0.015567360615942622, 0.00905043103315326,

|

|

||||||

-0.002347858896562788]

|

|

||||||

distortion_model: equidistant

|

|

||||||

intrinsics: [379.2869884263036, 379.26583742214524, 505.5666703237407, 510.2840961765407]

|

|

||||||

resolution: [1024, 1024]

|

|

||||||

rostopic: /cam1/image_raw

|

|

||||||

T_imu_body:

|

|

||||||

[1.0000, 0.0000, 0.0000, 0.0000,

|

|

||||||

0.0000, 1.0000, 0.0000, 0.0000,

|

|

||||||

0.0000, 0.0000, 1.0000, 0.0000,

|

|

||||||

0.0000, 0.0000, 0.0000, 1.0000]

|

|

||||||

@ -15,37 +15,6 @@

|

|||||||

#include "imu_state.h"

|

#include "imu_state.h"

|

||||||

|

|

||||||

namespace msckf_vio {

|

namespace msckf_vio {

|

||||||

|

|

||||||

struct Frame{

|

|

||||||

cv::Mat image;

|

|

||||||

cv::Mat dximage;

|

|

||||||

cv::Mat dyimage;

|

|

||||||

double exposureTime_ms;

|

|

||||||

};

|

|

||||||

|

|

||||||

typedef std::map<StateIDType, Frame, std::less<StateIDType>,

|

|

||||||

Eigen::aligned_allocator<

|

|

||||||

std::pair<StateIDType, Frame> > > movingWindow;

|

|

||||||

|

|

||||||

struct IlluminationParameter{

|

|

||||||

double frame_bias;

|

|

||||||

double frame_gain;

|

|

||||||

double feature_bias;

|

|

||||||

double feature_gain;

|

|

||||||

};

|

|

||||||

|

|

||||||

struct CameraCalibration{

|

|

||||||

std::string distortion_model;

|

|

||||||

cv::Vec2i resolution;

|

|

||||||

cv::Vec4d intrinsics;

|

|

||||||

cv::Vec4d distortion_coeffs;

|

|

||||||

movingWindow moving_window;

|

|

||||||

cv::Mat featureVisu;

|

|

||||||

int id;

|

|

||||||

};

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief CAMState Stored camera state in order to

|

* @brief CAMState Stored camera state in order to

|

||||||

* form measurement model.

|

* form measurement model.

|

||||||

@ -66,9 +35,6 @@ struct CAMState {

|

|||||||

// Position of the camera frame in the world frame.

|

// Position of the camera frame in the world frame.

|

||||||

Eigen::Vector3d position;

|

Eigen::Vector3d position;

|

||||||

|

|

||||||

// Illumination Information of the frame

|

|

||||||

IlluminationParameter illumination;

|

|

||||||

|

|

||||||

// These two variables should have the same physical

|

// These two variables should have the same physical

|

||||||

// interpretation with `orientation` and `position`.

|

// interpretation with `orientation` and `position`.

|

||||||

// There two variables are used to modify the measurement

|

// There two variables are used to modify the measurement

|

||||||

|

|||||||

File diff suppressed because it is too large


@ -1,61 +0,0 @@

|

|||||||

#ifndef MSCKF_VIO_IMAGE_HANDLER_H

|

|

||||||

#define MSCKF_VIO_IMAGE_HANDLER_H

|

|

||||||

|

|

||||||

#include <vector>

|

|

||||||

#include <boost/shared_ptr.hpp>

|

|

||||||

#include <opencv2/opencv.hpp>

|

|

||||||

#include <opencv2/video.hpp>

|

|

||||||

|

|

||||||

#include <ros/ros.h>

|

|

||||||

#include <cv_bridge/cv_bridge.h>

|

|

||||||

|

|

||||||

|

|

||||||

namespace msckf_vio {

|

|

||||||

/*

|

|

||||||

* @brief utilities for msckf_vio

|

|

||||||

*/

|

|

||||||

namespace image_handler {

|

|

||||||

|

|

||||||

cv::Point2f pinholeDownProject(const cv::Point2f& p_in, const cv::Vec4d& intrinsics);

|

|

||||||

cv::Point2f pinholeUpProject(const cv::Point2f& p_in, const cv::Vec4d& intrinsics);

|

|

||||||

|

|

||||||

void undistortImage(

|

|

||||||

cv::InputArray src,

|

|

||||||

cv::OutputArray dst,

|

|

||||||

const std::string& distortion_model,

|

|

||||||

const cv::Vec4d& intrinsics,

|

|

||||||

const cv::Vec4d& distortion_coeffs);

|

|

||||||

|

|

||||||

void undistortPoints(

|

|

||||||

const std::vector<cv::Point2f>& pts_in,

|

|

||||||

const cv::Vec4d& intrinsics,

|

|

||||||

const std::string& distortion_model,

|

|

||||||

const cv::Vec4d& distortion_coeffs,

|

|

||||||

std::vector<cv::Point2f>& pts_out,

|

|

||||||

const cv::Matx33d &rectification_matrix = cv::Matx33d::eye(),

|

|

||||||

const cv::Vec4d &new_intrinsics = cv::Vec4d(1,1,0,0));

|

|

||||||

|

|

||||||

std::vector<cv::Point2f> distortPoints(

|

|

||||||

const std::vector<cv::Point2f>& pts_in,

|

|

||||||

const cv::Vec4d& intrinsics,

|

|

||||||

const std::string& distortion_model,

|

|

||||||

const cv::Vec4d& distortion_coeffs);

|

|

||||||

|

|

||||||

cv::Point2f distortPoint(

|

|

||||||

const cv::Point2f& pt_in,

|

|

||||||

const cv::Vec4d& intrinsics,

|

|

||||||

const std::string& distortion_model,

|

|

||||||

const cv::Vec4d& distortion_coeffs);

|

|

||||||

|

|

||||||

void undistortPoint(

|

|

||||||

const cv::Point2f& pt_in,

|

|

||||||

const cv::Vec4d& intrinsics,

|

|

||||||

const std::string& distortion_model,

|

|

||||||

const cv::Vec4d& distortion_coeffs,

|

|

||||||

cv::Point2f& pt_out,

|

|

||||||

const cv::Matx33d &rectification_matrix = cv::Matx33d::eye(),

|

|

||||||

const cv::Vec4d &new_intrinsics = cv::Vec4d(1,1,0,0));

|

|

||||||

|

|

||||||

}

|

|

||||||

}

|

|

||||||

#endif

|

|

||||||

@ -14,6 +14,10 @@

|

|||||||

#include <opencv2/opencv.hpp>

|

#include <opencv2/opencv.hpp>

|

||||||

#include <opencv2/video.hpp>

|

#include <opencv2/video.hpp>

|

||||||

|

|

||||||

|

#include <opencv2/cudaoptflow.hpp>

|

||||||

|

#include <opencv2/cudaimgproc.hpp>

|

||||||

|

#include <opencv2/cudaarithm.hpp>

|

||||||

|

|

||||||

#include <ros/ros.h>

|

#include <ros/ros.h>

|

||||||

#include <cv_bridge/cv_bridge.h>

|

#include <cv_bridge/cv_bridge.h>

|

||||||

#include <image_transport/image_transport.h>

|

#include <image_transport/image_transport.h>

|

||||||

@ -22,8 +26,6 @@

|

|||||||

#include <message_filters/subscriber.h>

|

#include <message_filters/subscriber.h>

|

||||||

#include <message_filters/time_synchronizer.h>

|

#include <message_filters/time_synchronizer.h>

|

||||||

|

|

||||||

#include "cam_state.h"

|

|

||||||

|

|

||||||

namespace msckf_vio {

|

namespace msckf_vio {

|

||||||

|

|

||||||

/*

|

/*

|

||||||

@ -310,7 +312,7 @@ private:

|

|||||||

const std::vector<unsigned char>& markers,

|

const std::vector<unsigned char>& markers,

|

||||||

std::vector<T>& refined_vec) {

|

std::vector<T>& refined_vec) {

|

||||||

if (raw_vec.size() != markers.size()) {

|

if (raw_vec.size() != markers.size()) {

|

||||||

ROS_WARN("The input size of raw_vec(%lu) and markers(%lu) does not match...",

|

ROS_WARN("The input size of raw_vec(%i) and markers(%i) does not match...",

|

||||||

raw_vec.size(), markers.size());

|

raw_vec.size(), markers.size());

|

||||||

}

|

}

|

||||||

for (int i = 0; i < markers.size(); ++i) {

|

for (int i = 0; i < markers.size(); ++i) {

|

||||||
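Review note on the `ROS_WARN` change in the hunk above: both arguments are `std::vector::size()` results, which have type `std::size_t`. Neither `%lu` nor `%i` is guaranteed to match that type on every platform, whereas `%zu` is the printf length modifier defined for `size_t` since C99 and C++11. The sketch below shows the format string with plain `std::snprintf`; `sizeMismatchMessage` is a hypothetical helper for illustration, not a function in the repository.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical helper: build the warning text with %zu, the printf
// conversion that matches std::size_t.
std::string sizeMismatchMessage(std::size_t raw_size, std::size_t marker_size) {
  char buf[128];
  std::snprintf(buf, sizeof(buf),
                "The input size of raw_vec(%zu) and markers(%zu) does not match...",
                raw_size, marker_size);
  return std::string(buf);
}
```

Because `ROS_WARN` takes a printf-style format string, the same `%zu` specifier could be used there directly.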

@ -320,8 +322,6 @@ private:

|

|||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

bool STREAMPAUSE;

|

|

||||||

|

|

||||||

// Indicate if this is the first image message.

|

// Indicate if this is the first image message.

|

||||||

bool is_first_img;

|

bool is_first_img;

|

||||||

|

|

||||||

@ -336,8 +336,15 @@ private:

|

|||||||

std::vector<sensor_msgs::Imu> imu_msg_buffer;

|

std::vector<sensor_msgs::Imu> imu_msg_buffer;

|

||||||

|

|

||||||

// Camera calibration parameters

|

// Camera calibration parameters

|

||||||

CameraCalibration cam0;

|

std::string cam0_distortion_model;

|

||||||

CameraCalibration cam1;

|

cv::Vec2i cam0_resolution;

|

||||||

|

cv::Vec4d cam0_intrinsics;

|

||||||

|

cv::Vec4d cam0_distortion_coeffs;

|

||||||

|

|

||||||

|

std::string cam1_distortion_model;

|

||||||

|

cv::Vec2i cam1_resolution;

|

||||||

|

cv::Vec4d cam1_intrinsics;

|

||||||

|

cv::Vec4d cam1_distortion_coeffs;

|

||||||

|

|

||||||

// Take a vector from cam0 frame to the IMU frame.

|

// Take a vector from cam0 frame to the IMU frame.

|

||||||

cv::Matx33d R_cam0_imu;

|

cv::Matx33d R_cam0_imu;

|

||||||

@ -360,6 +367,13 @@ private:

|

|||||||

boost::shared_ptr<GridFeatures> prev_features_ptr;

|

boost::shared_ptr<GridFeatures> prev_features_ptr;

|

||||||

boost::shared_ptr<GridFeatures> curr_features_ptr;

|

boost::shared_ptr<GridFeatures> curr_features_ptr;

|

||||||

|

|

||||||

|

cv::Ptr<cv::cuda::SparsePyrLKOpticalFlow> d_pyrLK_sparse;

|

||||||

|

|

||||||

|

cv::cuda::GpuMat cam0_curr_img;

|

||||||

|

cv::cuda::GpuMat cam1_curr_img;

|

||||||

|

cv::cuda::GpuMat cam0_points_gpu;

|

||||||

|

cv::cuda::GpuMat cam1_points_gpu;

|

||||||

|

|

||||||

// Number of features after each outlier removal step.

|

// Number of features after each outlier removal step.

|

||||||

int before_tracking;

|

int before_tracking;

|

||||||

int after_tracking;

|

int after_tracking;

|

||||||

|

|||||||

@ -43,50 +43,6 @@ inline void quaternionNormalize(Eigen::Vector4d& q) {

|

|||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

/*

|

|

||||||

* @brief invert rotation of passed quaternion through conjugation

|

|

||||||

* and return conjugation

|

|

||||||

*/

|

|

||||||

inline Eigen::Vector4d quaternionConjugate(Eigen::Vector4d& q)

|

|

||||||

{

|

|

||||||

Eigen::Vector4d p;

|

|

||||||

p(0) = -q(0);

|

|

||||||

p(1) = -q(1);

|

|

||||||

p(2) = -q(2);

|

|

||||||

p(3) = q(3);

|

|

||||||

quaternionNormalize(p);

|

|

||||||

return p;

|

|

||||||

}

|

|

||||||

|

|

||||||

/*

|

|

||||||

* @brief converts a vector4 to a vector3, dropping (3)

|

|

||||||

* this is typically used to get the vector part of a quaternion

|

|

||||||

for eq small angle approximation

|

|

||||||

*/

|

|

||||||

inline Eigen::Vector3d v4tov3(const Eigen::Vector4d& q)

|

|

||||||

{

|

|

||||||

Eigen::Vector3d p;

|

|

||||||

p(0) = q(0);

|

|

||||||

p(1) = q(1);

|

|

||||||

p(2) = q(2);

|

|

||||||

return p;

|

|

||||||

}

|

|

||||||

|

|

||||||

/*

|

|

||||||

* @brief Perform q1 * q2

|

|

||||||

*/

|

|

||||||

|

|

||||||

inline Eigen::Vector4d QtoV(const Eigen::Quaterniond& q)

|

|

||||||

{

|

|

||||||

Eigen::Vector4d p;

|

|

||||||

p(0) = q.x();

|

|

||||||

p(1) = q.y();

|

|

||||||

p(2) = q.z();

|

|

||||||

p(3) = q.w();

|

|

||||||

return p;

|

|

||||||

}

|

|

||||||

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief Perform q1 * q2

|

* @brief Perform q1 * q2

|

||||||

*/

|

*/

|

||||||

|

|||||||

@ -14,17 +14,11 @@

|

|||||||

#include <string>

|

#include <string>

|

||||||

#include <Eigen/Dense>

|

#include <Eigen/Dense>

|

||||||

#include <Eigen/Geometry>

|

#include <Eigen/Geometry>

|

||||||

#include <math.h>

|

|

||||||

#include <boost/shared_ptr.hpp>

|

#include <boost/shared_ptr.hpp>

|

||||||

#include <opencv2/opencv.hpp>

|

|

||||||

#include <opencv2/video.hpp>

|

|

||||||

|

|

||||||

#include <ros/ros.h>

|

#include <ros/ros.h>

|

||||||

#include <sensor_msgs/Imu.h>

|

#include <sensor_msgs/Imu.h>

|

||||||

#include <sensor_msgs/Image.h>

|

|

||||||

#include <sensor_msgs/PointCloud.h>

|

|

||||||

#include <nav_msgs/Odometry.h>

|

#include <nav_msgs/Odometry.h>

|

||||||

#include <std_msgs/Float64.h>

|

|

||||||

#include <tf/transform_broadcaster.h>

|

#include <tf/transform_broadcaster.h>

|

||||||

#include <std_srvs/Trigger.h>

|

#include <std_srvs/Trigger.h>

|

||||||

|

|

||||||

@ -33,13 +27,6 @@

|

|||||||

#include "feature.hpp"

|

#include "feature.hpp"

|

||||||

#include <msckf_vio/CameraMeasurement.h>

|

#include <msckf_vio/CameraMeasurement.h>

|

||||||

|

|

||||||

#include <cv_bridge/cv_bridge.h>

|

|

||||||

#include <image_transport/image_transport.h>

|

|

||||||

#include <message_filters/subscriber.h>

|

|

||||||

#include <message_filters/time_synchronizer.h>

|

|

||||||

|

|

||||||

#define PI 3.14159265

|

|

||||||

|

|

||||||

namespace msckf_vio {

|

namespace msckf_vio {

|

||||||

/*

|

/*

|

||||||

* @brief MsckfVio Implements the algorithm in

|

* @brief MsckfVio Implements the algorithm in

|

||||||

@ -110,27 +97,11 @@ class MsckfVio {

|

|||||||

void imuCallback(const sensor_msgs::ImuConstPtr& msg);

|

void imuCallback(const sensor_msgs::ImuConstPtr& msg);

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief truthCallback

|

* @brief featureCallback

|

||||||

* Callback function for ground truth navigation information

|

* Callback function for feature measurements.

|

||||||

* @param TransformStamped msg

|

* @param msg Stereo feature measurements.

|

||||||

*/

|

*/

|

||||||

void truthCallback(

|

void featureCallback(const CameraMeasurementConstPtr& msg);

|

||||||

const geometry_msgs::TransformStampedPtr& msg);

|

|

||||||

|

|

||||||

|

|

||||||

/*

|

|

||||||

* @brief imageCallback

|

|

||||||

* Callback function for feature measurements

|

|

||||||

* Triggers measurement update

|

|

||||||

* @param msg

|

|

||||||

* Camera 0 Image

|

|

||||||

* Camera 1 Image

|

|

||||||

* Stereo feature measurements.

|

|

||||||

*/

|

|

||||||

void imageCallback (

|

|

||||||

const sensor_msgs::ImageConstPtr& cam0_img,

|

|

||||||

const sensor_msgs::ImageConstPtr& cam1_img,

|

|

||||||

const CameraMeasurementConstPtr& feature_msg);

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief publish Publish the results of VIO.

|

* @brief publish Publish the results of VIO.

|

||||||

@ -155,26 +126,6 @@ class MsckfVio {

|

|||||||

bool resetCallback(std_srvs::Trigger::Request& req,

|

bool resetCallback(std_srvs::Trigger::Request& req,

|

||||||

std_srvs::Trigger::Response& res);

|

std_srvs::Trigger::Response& res);

|

||||||

|

|

||||||

// Saves the exposure time and the camera measurementes

|

|

||||||

void manageMovingWindow(

|

|

||||||

const sensor_msgs::ImageConstPtr& cam0_img,

|

|

||||||

const sensor_msgs::ImageConstPtr& cam1_img,

|

|

||||||

const CameraMeasurementConstPtr& feature_msg);

|

|

||||||

|

|

||||||

|

|

||||||

void calcErrorState();

|

|

||||||

|

|

||||||

// Debug related Functions

|

|

||||||

// Propagate the true state

|

|

||||||

void batchTruthProcessing(

|

|

||||||

const double& time_bound);

|

|

||||||

|

|

||||||

|

|

||||||

void processTruthtoIMU(const double& time,

|

|

||||||

const Eigen::Vector4d& m_rot,

|

|

||||||

const Eigen::Vector3d& m_trans);

|

|

||||||

|

|

||||||

|

|

||||||

// Filter related functions

|

// Filter related functions

|

||||||

// Propogate the state

|

// Propogate the state

|

||||||

void batchImuProcessing(

|

void batchImuProcessing(

|

||||||

@ -186,12 +137,8 @@ class MsckfVio {

|

|||||||

const Eigen::Vector3d& gyro,

|

const Eigen::Vector3d& gyro,

|

||||||

const Eigen::Vector3d& acc);

|

const Eigen::Vector3d& acc);

|

||||||

|

|

||||||

// groundtruth state augmentation

|

|

||||||

void truePhotometricStateAugmentation(const double& time);

|

|

||||||

|

|

||||||

// Measurement update

|

// Measurement update

|

||||||

void stateAugmentation(const double& time);

|

void stateAugmentation(const double& time);

|

||||||

void PhotometricStateAugmentation(const double& time);

|

|

||||||

void addFeatureObservations(const CameraMeasurementConstPtr& msg);

|

void addFeatureObservations(const CameraMeasurementConstPtr& msg);

|

||||||

// This function is used to compute the measurement Jacobian

|

// This function is used to compute the measurement Jacobian

|

||||||

// for a single feature observed at a single camera frame.

|

// for a single feature observed at a single camera frame.

|

||||||

@ -202,118 +149,25 @@ class MsckfVio {

|

|||||||

Eigen::Vector4d& r);

|

Eigen::Vector4d& r);

|

||||||

// This function computes the Jacobian of all measurements viewed

|

// This function computes the Jacobian of all measurements viewed

|

||||||

// in the given camera states of this feature.

|

// in the given camera states of this feature.

|

||||||

bool featureJacobian(

|

void featureJacobian(const FeatureIDType& feature_id,

|

||||||

const FeatureIDType& feature_id,

|

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

||||||

|

|

||||||

|

|

||||||

void twodotMeasurementJacobian(

|

|

||||||

const StateIDType& cam_state_id,

|

|

||||||

const FeatureIDType& feature_id,

|

|

||||||

Eigen::MatrixXd& H_x, Eigen::MatrixXd& H_y, Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

bool ConstructJacobians(

|

|

||||||

Eigen::MatrixXd& H_rho,

|

|

||||||

Eigen::MatrixXd& H_pl,

|

|

||||||

Eigen::MatrixXd& H_pA,

|

|

||||||

const Feature& feature,

|

|

||||||

const StateIDType& cam_state_id,

|

|

||||||

Eigen::MatrixXd& H_xl,

|

|

||||||

Eigen::MatrixXd& H_yl);

|

|

||||||

|

|

||||||

bool PhotometricPatchPointResidual(

|

|

||||||

const StateIDType& cam_state_id,

|

|

||||||

const Feature& feature,

|

|

||||||

Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

bool PhotometricPatchPointJacobian(

|

|

||||||

const CAMState& cam_state,

|

|

||||||

const StateIDType& cam_state_id,

|

|

||||||

const Feature& feature,

|

|

||||||

Eigen::Vector3d point,

|

|

||||||

int count,

|

|

||||||

Eigen::Matrix<double, 2, 1>& H_rhoj,

|

|

||||||

Eigen::Matrix<double, 2, 6>& H_plj,

|

|

||||||

Eigen::Matrix<double, 2, 6>& H_pAj,

|

|

||||||

Eigen::Matrix<double, 2, 4>& dI_dhj);

|

|

||||||

|

|

||||||

bool PhotometricMeasurementJacobian(

|

|

||||||

const StateIDType& cam_state_id,

|

|

||||||

const FeatureIDType& feature_id,

|

|

||||||

Eigen::MatrixXd& H_x,

|

|

||||||

Eigen::MatrixXd& H_y,

|

|

||||||

Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

|

|

||||||

bool twodotFeatureJacobian(

|

|

||||||

const FeatureIDType& feature_id,

|

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

|

||||||

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

bool PhotometricFeatureJacobian(

|

|

||||||

const FeatureIDType& feature_id,

|

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

|

||||||

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

void photometricMeasurementUpdate(const Eigen::MatrixXd& H, const Eigen::VectorXd& r);

|

|

||||||

void measurementUpdate(const Eigen::MatrixXd& H,

|

void measurementUpdate(const Eigen::MatrixXd& H,

|

||||||

const Eigen::VectorXd& r);

|

const Eigen::VectorXd& r);

|

||||||

void twoMeasurementUpdate(const Eigen::MatrixXd& H, const Eigen::VectorXd& r);

|

|

||||||

|

|

||||||

bool gatingTest(const Eigen::MatrixXd& H,

|

bool gatingTest(const Eigen::MatrixXd& H,

|

||||||

const Eigen::VectorXd&r, const int& dof, int filter=0);

|

const Eigen::VectorXd&r, const int& dof);

|

||||||

void removeLostFeatures();

|

void removeLostFeatures();

|

||||||

void findRedundantCamStates(

|

void findRedundantCamStates(

|

||||||

std::vector<StateIDType>& rm_cam_state_ids);

|

std::vector<StateIDType>& rm_cam_state_ids);

|

||||||

|

|

||||||

void pruneLastCamStateBuffer();

|

|

||||||

void pruneCamStateBuffer();

|

void pruneCamStateBuffer();

|

||||||

// Reset the system online if the uncertainty is too large.

|

// Reset the system online if the uncertainty is too large.

|

||||||

void onlineReset();

|

void onlineReset();

|

||||||

|

|

||||||

// Photometry flag

|

|

||||||

int FILTER;

|

|

||||||

|

|

||||||

// debug flag

|

|

||||||

bool STREAMPAUSE;

|

|

||||||

bool PRINTIMAGES;

|

|

||||||

bool GROUNDTRUTH;

|

|

||||||

|

|

||||||

bool nan_flag;

|

|

||||||

bool play;

|

|

||||||

double last_time_bound;

|

|

||||||

double time_offset;

|

|

||||||

|

|

||||||

// Patch size for Photometry

|

|

||||||

int N;

|

|

||||||

// Image rescale

|

|

||||||

int SCALE;

|

|

||||||

// Chi squared test table.

|

// Chi squared test table.

|

||||||

static std::map<int, double> chi_squared_test_table;

|

static std::map<int, double> chi_squared_test_table;

|

||||||

|

|

||||||

double eval_time;

|

|

||||||

|

|

||||||

IMUState timed_old_imu_state;

|

|

||||||

IMUState timed_old_true_state;

|

|

||||||

|

|

||||||

IMUState old_imu_state;

|

|

||||||

IMUState old_true_state;

|

|

||||||

|

|

||||||

// change in position

|

|

||||||

Eigen::Vector3d delta_position;

|

|

||||||

Eigen::Vector3d delta_orientation;

|

|

||||||

|

|

||||||

// State vector

|

// State vector

|

||||||

StateServer state_server;

|

StateServer state_server;

|

||||||

StateServer photometric_state_server;

|

|

||||||

|

|

||||||

// Ground truth state vector

|

|

||||||

StateServer true_state_server;

|

|

||||||

|

|

||||||

// error state based on ground truth

|

|

||||||

StateServer err_state_server;

|

|

||||||

|

|

||||||

// Maximum number of camera states

|

// Maximum number of camera states

|

||||||

int max_cam_state_size;

|

int max_cam_state_size;

|

||||||

|

|

||||||

@@ -325,22 +179,6 @@ class MsckfVio {

|

|||||||

// transfer delay between IMU and Image messages.

|

// transfer delay between IMU and Image messages.

|

||||||

std::vector<sensor_msgs::Imu> imu_msg_buffer;

|

std::vector<sensor_msgs::Imu> imu_msg_buffer;

|

||||||

|

|

||||||

// Ground Truth message data

|

|

||||||

std::vector<geometry_msgs::TransformStamped> truth_msg_buffer;

|

|

||||||

// Moving Window buffer

|

|

||||||

movingWindow cam0_moving_window;

|

|

||||||

movingWindow cam1_moving_window;

|

|

||||||

|

|

||||||

// Camera calibration parameters

|

|

||||||

CameraCalibration cam0;

|

|

||||||

CameraCalibration cam1;

|

|

||||||

|

|

||||||

// covariance data

|

|

||||||

double irradiance_frame_bias;

|

|

||||||

|

|

||||||

|

|

||||||

ros::Time image_save_time;

|

|

||||||

|

|

||||||

// Indicate if the gravity vector is set.

|

// Indicate if the gravity vector is set.

|

||||||

bool is_gravity_set;

|

bool is_gravity_set;

|

||||||

|

|

||||||

@@ -368,21 +206,12 @@ class MsckfVio {

|

|||||||

|

|

||||||

// Subscribers and publishers

|

// Subscribers and publishers

|

||||||

ros::Subscriber imu_sub;

|

ros::Subscriber imu_sub;

|

||||||

ros::Subscriber truth_sub;

|

ros::Subscriber feature_sub;

|

||||||

ros::Publisher truth_odom_pub;

|

|

||||||

ros::Publisher odom_pub;

|

ros::Publisher odom_pub;

|

||||||

ros::Publisher marker_pub;

|

|

||||||

ros::Publisher feature_pub;

|

ros::Publisher feature_pub;

|

||||||

tf::TransformBroadcaster tf_pub;

|

tf::TransformBroadcaster tf_pub;

|

||||||

ros::ServiceServer reset_srv;

|

ros::ServiceServer reset_srv;

|

||||||

|

|

||||||

|

|

||||||

message_filters::Subscriber<sensor_msgs::Image> cam0_img_sub;

|

|

||||||

message_filters::Subscriber<sensor_msgs::Image> cam1_img_sub;

|

|

||||||

message_filters::Subscriber<CameraMeasurement> feature_sub;

|

|

||||||

|

|

||||||

message_filters::TimeSynchronizer<sensor_msgs::Image, sensor_msgs::Image, CameraMeasurement> image_sub;

|

|

||||||

|

|

||||||

// Frame id

|

// Frame id

|

||||||

std::string fixed_frame_id;

|

std::string fixed_frame_id;

|

||||||

std::string child_frame_id;

|

std::string child_frame_id;

|

||||||

@@ -403,9 +232,6 @@ class MsckfVio {

|

|||||||

ros::Publisher mocap_odom_pub;

|

ros::Publisher mocap_odom_pub;

|

||||||

geometry_msgs::TransformStamped raw_mocap_odom_msg;

|

geometry_msgs::TransformStamped raw_mocap_odom_msg;

|

||||||

Eigen::Isometry3d mocap_initial_frame;

|

Eigen::Isometry3d mocap_initial_frame;

|

||||||

|

|

||||||

Eigen::Vector4d mocap_initial_orientation;

|

|

||||||

Eigen::Vector3d mocap_initial_position;

|

|

||||||

};

|

};

|

||||||

|

|

||||||

typedef MsckfVio::Ptr MsckfVioPtr;

|

typedef MsckfVio::Ptr MsckfVioPtr;

|

||||||

|

|||||||

@@ -11,6 +11,9 @@

|

|||||||

#include <ros/ros.h>

|

#include <ros/ros.h>

|

||||||

#include <string>

|

#include <string>

|

||||||

#include <opencv2/core/core.hpp>

|

#include <opencv2/core/core.hpp>

|

||||||

|

#include <opencv2/cudaoptflow.hpp>

|

||||||

|

#include <opencv2/cudaimgproc.hpp>

|

||||||

|

#include <opencv2/cudaarithm.hpp>

|

||||||

#include <Eigen/Geometry>

|

#include <Eigen/Geometry>

|

||||||

|

|

||||||

namespace msckf_vio {

|

namespace msckf_vio {

|

||||||

@@ -18,6 +21,10 @@ namespace msckf_vio {

|

|||||||

* @brief utilities for msckf_vio

|

* @brief utilities for msckf_vio

|

||||||

*/

|

*/

|

||||||

namespace utils {

|

namespace utils {

|

||||||

|

|

||||||

|

void download(const cv::cuda::GpuMat& d_mat, std::vector<uchar>& vec);

|

||||||

|

void download(const cv::cuda::GpuMat& d_mat, std::vector<cv::Point2f>& vec);

|

||||||

|

|

||||||

Eigen::Isometry3d getTransformEigen(const ros::NodeHandle &nh,

|

Eigen::Isometry3d getTransformEigen(const ros::NodeHandle &nh,

|

||||||

const std::string &field);

|

const std::string &field);

|

||||||

|

|

||||||

|

|||||||

@@ -8,8 +8,7 @@

|

|||||||

<group ns="$(arg robot)">

|

<group ns="$(arg robot)">

|

||||||

<node pkg="nodelet" type="nodelet" name="image_processor"

|

<node pkg="nodelet" type="nodelet" name="image_processor"

|

||||||

args="standalone msckf_vio/ImageProcessorNodelet"

|

args="standalone msckf_vio/ImageProcessorNodelet"

|

||||||

output="screen"

|

output="screen">

|

||||||

>

|

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

<param name="grid_row" value="4"/>

|

<param name="grid_row" value="4"/>

|

||||||

|

|||||||

@@ -1,33 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-mynt.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="image_processor"

|

|

||||||

args="standalone msckf_vio/ImageProcessorNodelet"

|

|

||||||

output="screen">

|

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

<param name="grid_row" value="4"/>

|

|

||||||

<param name="grid_col" value="5"/>

|

|

||||||

<param name="grid_min_feature_num" value="3"/>

|

|

||||||

<param name="grid_max_feature_num" value="4"/>

|

|

||||||

<param name="pyramid_levels" value="3"/>

|

|

||||||

<param name="patch_size" value="15"/>

|

|

||||||

<param name="fast_threshold" value="10"/>

|

|

||||||

<param name="max_iteration" value="30"/>

|

|

||||||

<param name="track_precision" value="0.01"/>

|

|

||||||

<param name="ransac_threshold" value="3"/>

|

|

||||||

<param name="stereo_threshold" value="5"/>

|

|

||||||

|

|

||||||

<remap from="~imu" to="/imu0"/>

|

|

||||||

<remap from="~cam0_image" to="/mynteye/left/image_raw"/>

|

|

||||||

<remap from="~cam1_image" to="/mynteye/right/image_raw"/>

|

|

||||||

|

|

||||||

</node>

|

|

||||||

</group>

|

|

||||||

|

|

||||||

</launch>

|

|

||||||

@@ -1,38 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-tum-scaled.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="image_processor"

|

|

||||||

args="standalone msckf_vio/ImageProcessorNodelet"

|

|

||||||

output="screen"

|

|

||||||

>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

|

||||||

<param name="StreamPause" value="true"/>

|

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

<param name="grid_row" value="4"/>

|

|

||||||

<param name="grid_col" value="4"/>

|

|

||||||

<param name="grid_min_feature_num" value="3"/>

|

|

||||||

<param name="grid_max_feature_num" value="5"/>

|

|

||||||

<param name="pyramid_levels" value="3"/>

|

|

||||||

<param name="patch_size" value="15"/>

|

|

||||||

<param name="fast_threshold" value="10"/>

|

|

||||||

<param name="max_iteration" value="30"/>

|

|

||||||

<param name="track_precision" value="0.01"/>

|

|

||||||

<param name="ransac_threshold" value="3"/>

|

|

||||||

<param name="stereo_threshold" value="5"/>

|

|

||||||

|

|

||||||

<remap from="~imu" to="/imu0"/>

|

|

||||||

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

|

||||||

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

|

||||||

|

|

||||||

|

|

||||||

</node>

|

|

||||||

</group>

|

|

||||||

|

|

||||||

</launch>

|

|

||||||

@@ -1,37 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-tum.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="image_processor"

|

|

||||||

args="standalone msckf_vio/ImageProcessorNodelet"

|

|

||||||

output="screen"

|

|

||||||

>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

|

||||||

<param name="StreamPause" value="true"/>

|

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

<param name="grid_row" value="4"/>

|

|

||||||

<param name="grid_col" value="4"/>

|

|

||||||

<param name="grid_min_feature_num" value="3"/>

|

|

||||||

<param name="grid_max_feature_num" value="4"/>

|

|

||||||

<param name="pyramid_levels" value="3"/>

|

|

||||||

<param name="patch_size" value="15"/>

|

|

||||||

<param name="fast_threshold" value="10"/>

|

|

||||||

<param name="max_iteration" value="30"/>

|

|

||||||

<param name="track_precision" value="0.01"/>

|

|

||||||

<param name="ransac_threshold" value="3"/>

|

|

||||||

<param name="stereo_threshold" value="5"/>

|

|

||||||

|

|

||||||

<remap from="~imu" to="/imu0"/>

|

|

||||||

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

|

||||||

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

|

||||||

|

|

||||||

</node>

|

|

||||||

</group>

|

|

||||||

|

|

||||||

</launch>

|

|

||||||

@@ -1,75 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="fixed_frame_id" default="world"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-tum.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<include file="$(find msckf_vio)/launch/image_processor_tum.launch">

|

|

||||||

<arg name="robot" value="$(arg robot)"/>

|

|

||||||

<arg name="calibration_file" value="$(arg calibration_file)"/>

|

|

||||||

</include>

|

|

||||||

|

|

||||||

<!-- Msckf Vio Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="vio"

|

|

||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

|

||||||

output="screen"

|

|

||||||

launch-prefix="xterm -e gdb --args">

|

|

||||||

|

|

||||||

<!-- Photometry Flag-->

|

|

||||||

<param name="PHOTOMETRIC" value="true"/>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

|

||||||

<param name="PrintImages" value="true"/>

|

|

||||||

<param name="GroundTruth" value="false"/>

|

|

||||||

|

|

||||||

<param name="patch_size_n" value="3"/>

|

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

|

|

||||||

<param name="publish_tf" value="true"/>

|

|

||||||

<param name="frame_rate" value="20"/>

|

|

||||||

<param name="fixed_frame_id" value="$(arg fixed_frame_id)"/>

|

|

||||||

<param name="child_frame_id" value="odom"/>

|

|

||||||

<param name="max_cam_state_size" value="20"/>

|

|

||||||

<param name="position_std_threshold" value="8.0"/>

|

|

||||||

|

|

||||||

<param name="rotation_threshold" value="0.2618"/>

|

|

||||||

<param name="translation_threshold" value="0.4"/>

|

|

||||||

<param name="tracking_rate_threshold" value="0.5"/>

|

|

||||||

|

|

||||||

<!-- Feature optimization config -->

|

|

||||||

<param name="feature/config/translation_threshold" value="-1.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be standard deviation -->

|

|

||||||

<param name="noise/gyro" value="0.005"/>

|

|

||||||

<param name="noise/acc" value="0.05"/>

|

|

||||||

<param name="noise/gyro_bias" value="0.001"/>

|

|

||||||

<param name="noise/acc_bias" value="0.01"/>

|

|

||||||

<param name="noise/feature" value="0.035"/>

|

|

||||||

|

|

||||||

<param name="initial_state/velocity/x" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/y" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/z" value="0.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be covariance -->

|

|

||||||

<param name="initial_covariance/velocity" value="0.25"/>

|

|

||||||

<param name="initial_covariance/gyro_bias" value="0.01"/>

|

|

||||||

<param name="initial_covariance/acc_bias" value="0.01"/>

|

|

||||||

<param name="initial_covariance/extrinsic_rotation_cov" value="3.0462e-4"/>

|

|

||||||

<param name="initial_covariance/extrinsic_translation_cov" value="2.5e-5"/>

|

|

||||||

<param name="initial_covariance/irradiance_frame_bias" value="0.1"/>

|

|

||||||

|

|

||||||

<remap from="~imu" to="/imu0"/>

|

|

||||||

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

|

||||||

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

|

||||||

|

|

||||||

<remap from="~features" to="image_processor/features"/>

|

|

||||||

|

|

||||||

</node>

|

|

||||||

</group>

|

|

||||||

|

|

||||||

</launch>

|

|

||||||

@@ -17,18 +17,6 @@

|

|||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

args='standalone msckf_vio/MsckfVioNodelet'

|

||||||

output="screen">

|

output="screen">

|

||||||

|

|

||||||

|

|

||||||

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

|

||||||

<param name="FILTER" value="1"/>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

|

||||||

<param name="StreamPause" value="true"/>

|

|

||||||

<param name="PrintImages" value="true"/>

|

|

||||||

<param name="GroundTruth" value="false"/>

|

|

||||||

|

|

||||||

<param name="patch_size_n" value="5"/>

|

|

||||||

<param name="image_scale" value ="1"/>

|

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

<!-- Calibration parameters -->

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

|

|

||||||

@@ -65,9 +53,6 @@

|

|||||||

<param name="initial_covariance/extrinsic_translation_cov" value="2.5e-5"/>

|

<param name="initial_covariance/extrinsic_translation_cov" value="2.5e-5"/>

|

||||||

|

|

||||||

<remap from="~imu" to="/imu0"/>

|

<remap from="~imu" to="/imu0"/>

|

||||||

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

|

||||||

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

|

||||||

|

|

||||||

<remap from="~features" to="image_processor/features"/>

|

<remap from="~features" to="image_processor/features"/>

|

||||||

|

|

||||||

</node>

|

</node>

|

||||||

|

|||||||

@@ -1,61 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="fixed_frame_id" default="world"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-mynt.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<include file="$(find msckf_vio)/launch/image_processor_mynt.launch">

|

|

||||||

<arg name="robot" value="$(arg robot)"/>

|

|

||||||

<arg name="calibration_file" value="$(arg calibration_file)"/>

|

|

||||||

</include>

|

|

||||||

|

|

||||||

<!-- Msckf Vio Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="vio"

|

|

||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

|

||||||

output="screen">

|

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

|

|

||||||

<param name="publish_tf" value="true"/>

|

|

||||||

<param name="frame_rate" value="20"/>

|

|

||||||

<param name="fixed_frame_id" value="$(arg fixed_frame_id)"/>

|

|

||||||

<param name="child_frame_id" value="odom"/>

|

|

||||||

<param name="max_cam_state_size" value="20"/>

|

|

||||||

<param name="position_std_threshold" value="8.0"/>

|

|

||||||

|

|

||||||

<param name="rotation_threshold" value="0.2618"/>

|

|

||||||

<param name="translation_threshold" value="0.4"/>

|

|

||||||

<param name="tracking_rate_threshold" value="0.5"/>

|

|

||||||

|

|

||||||

<!-- Feature optimization config -->

|

|

||||||

<param name="feature/config/translation_threshold" value="-1.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be standard deviation -->

|

|

||||||

<param name="noise/gyro" value="0.005"/>

|

|

||||||

<param name="noise/acc" value="0.05"/>

|

|

||||||

<param name="noise/gyro_bias" value="0.001"/>

|

|

||||||

<param name="noise/acc_bias" value="0.01"/>

|

|

||||||

<param name="noise/feature" value="0.035"/>

|

|

||||||

|

|

||||||

<param name="initial_state/velocity/x" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/y" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/z" value="0.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be covariance -->

|

|

||||||

<param name="initial_covariance/velocity" value="0.25"/>

|

|

||||||

<param name="initial_covariance/gyro_bias" value="0.01"/>

|

|

||||||

<param name="initial_covariance/acc_bias" value="0.01"/>

|

|

||||||

<param name="initial_covariance/extrinsic_rotation_cov" value="3.0462e-4"/>

|

|

||||||

<param name="initial_covariance/extrinsic_translation_cov" value="2.5e-5"/>

|

|

||||||

|

|

||||||

<remap from="~imu" to="/mynteye/imu/data_raw"/>

|

|

||||||

<remap from="~features" to="image_processor/features"/>

|

|

||||||

|

|

||||||

</node>

|

|

||||||

</group>

|

|

||||||

|

|

||||||

</launch>

|

|

||||||

@@ -1,77 +0,0 @@

|

|||||||

<launch>

|

|

||||||

|

|

||||||

<arg name="robot" default="firefly_sbx"/>

|

|

||||||

<arg name="fixed_frame_id" default="world"/>

|

|

||||||

<arg name="calibration_file"

|

|

||||||

default="$(find msckf_vio)/config/camchain-imucam-tum-scaled.yaml"/>

|

|

||||||

|

|

||||||

<!-- Image Processor Nodelet -->

|

|

||||||

<include file="$(find msckf_vio)/launch/image_processor_tinytum.launch">

|

|

||||||

<arg name="robot" value="$(arg robot)"/>

|

|

||||||

<arg name="calibration_file" value="$(arg calibration_file)"/>

|

|

||||||

</include>

|

|

||||||

|

|

||||||

<!-- Msckf Vio Nodelet -->

|

|

||||||

<group ns="$(arg robot)">

|

|

||||||

<node pkg="nodelet" type="nodelet" name="vio"

|

|

||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

|

||||||

output="screen">

|

|

||||||

|

|

||||||

<param name="FILTER" value="0"/>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

|

||||||

<param name="StreamPause" value="true"/>

|

|

||||||

<param name="PrintImages" value="false"/>

|

|

||||||

<param name="GroundTruth" value="false"/>

|

|

||||||

|

|

||||||

<param name="patch_size_n" value="3"/>

|

|

||||||

<param name="image_scale" value ="1"/>

|

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

|

||||||

|

|

||||||

<param name="publish_tf" value="true"/>

|

|

||||||

<param name="frame_rate" value="20"/>

|

|

||||||

<param name="fixed_frame_id" value="$(arg fixed_frame_id)"/>

|

|

||||||

<param name="child_frame_id" value="odom"/>

|

|

||||||

<param name="max_cam_state_size" value="12"/>

|

|

||||||

<param name="position_std_threshold" value="8.0"/>

|

|

||||||

|

|

||||||

<param name="rotation_threshold" value="0.2618"/>

|

|

||||||

<param name="translation_threshold" value="0.4"/>

|

|

||||||

<param name="tracking_rate_threshold" value="0.5"/>

|

|

||||||

|

|

||||||

<!-- Feature optimization config -->

|

|

||||||

<param name="feature/config/translation_threshold" value="-1.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be standard deviation -->

|

|

||||||

<param name="noise/gyro" value="0.005"/>

|

|

||||||

<param name="noise/acc" value="0.05"/>

|

|

||||||

<param name="noise/gyro_bias" value="0.001"/>

|

|

||||||

<param name="noise/acc_bias" value="0.01"/>

|

|

||||||

<param name="noise/feature" value="0.035"/>

|

|

||||||

|

|

||||||

<param name="initial_state/velocity/x" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/y" value="0.0"/>

|

|

||||||

<param name="initial_state/velocity/z" value="0.0"/>

|

|

||||||

|

|

||||||

<!-- These values should be covariance -->

|

|

||||||

<param name="initial_covariance/velocity" value="0.25"/>

|

|

||||||

<param name="initial_covariance/gyro_bias" value="0.01"/>

|

|

||||||

<param name="initial_covariance/acc_bias" value="0.01"/>