Compare commits

23 Commits

5b87d5b3bb

...

master

| Author | SHA1 | Date | |

|---|---|---|---|

| 43ac48bf56 | |||

| e9d801bdc1 | |||

| 4a5bdc735c | |||

| 9253fcab5f | |||

| 69d385f257 | |||

| 62cd89fd0d | |||

| a8090ca58a | |||

| ed2ba61828 | |||

| e94d4a06b5 | |||

| 0ef71b9220 | |||

| 5a80f97b68 | |||

| 876fa7617c | |||

| 14825c344e | |||

| 02156bd89a | |||

| 5451c2d097 | |||

| b3e86b3e64 | |||

| ebc73c0c5e | |||

| 273bbf8a94 | |||

| 1d6100ed13 | |||

| 6f7f8b7892 | |||

| c565033d7f | |||

| 4842c175a5 | |||

| 9ded72a366 |

30

README.md

30

README.md

@ -1,12 +1,22 @@

|

|||||||

# MSCKF\_VIO

|

# MSCKF\_VIO

|

||||||

|

|

||||||

|

|

||||||

The `MSCKF_VIO` package is a stereo version of MSCKF. The software takes in synchronized stereo images and IMU messages and generates real-time 6DOF pose estimation of the IMU frame.

|

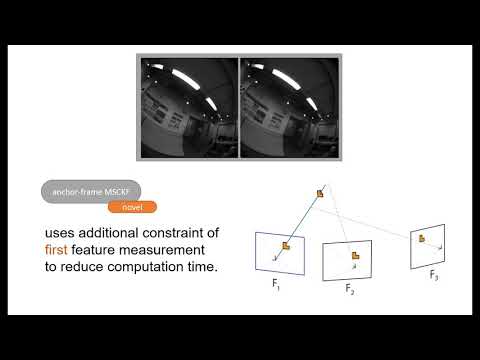

The `MSCKF_VIO` package is a stereo-photometric version of MSCKF. The software takes in synchronized stereo images and IMU messages and generates real-time 6DOF pose estimation of the IMU frame.

|

||||||

|

|

||||||

The software is tested on Ubuntu 16.04 with ROS Kinetic.

|

This approach is based on the paper written by Ke Sun et al.

|

||||||

|

[https://arxiv.org/abs/1712.00036](https://arxiv.org/abs/1712.00036) and their Stereo MSCKF implementation, which tightly fuse the matched feature information of a stereo image pair into a 6DOF Pose.

|

||||||

|

The approach implemented in this repository follows the semi-dense msckf approach tightly fusing the photometric

|

||||||

|

information around the matched featues into the covariance matrix, as described and derived in the master thesis [Pose Estimation using a Stereo-Photometric Multi-State Constraint Kalman Filter](http://raphael.maenle.net/resources/sp-msckf/maenle_master_thesis.pdf).

|

||||||

|

|

||||||

|

It's positioning is comparable to the approach from Ke Sun et al. with the photometric approach, with a higher

|

||||||

|

computational load, especially with larger image patches around the feature. A video explaining the approach can be

|

||||||

|

found on [https://youtu.be/HrqQywAnenQ](https://youtu.be/HrqQywAnenQ):

|

||||||

|

<br/>

|

||||||

|

[](https://www.youtube.com/watch?v=HrqQywAnenQ)

|

||||||

|

|

||||||

|

<br/>

|

||||||

|

This software should be deployed using ROS Kinetic on Ubuntu 16.04 or 18.04.

|

||||||

|

|

||||||

Video: [https://www.youtube.com/watch?v=jxfJFgzmNSw&t](https://www.youtube.com/watch?v=jxfJFgzmNSw&t=3s)<br/>

|

|

||||||

Paper Draft: [https://arxiv.org/abs/1712.00036](https://arxiv.org/abs/1712.00036)

|

|

||||||

|

|

||||||

## License

|

## License

|

||||||

|

|

||||||

@ -28,16 +38,6 @@ cd your_work_space

|

|||||||

catkin_make --pkg msckf_vio --cmake-args -DCMAKE_BUILD_TYPE=Release

|

catkin_make --pkg msckf_vio --cmake-args -DCMAKE_BUILD_TYPE=Release

|

||||||

```

|

```

|

||||||

|

|

||||||

## Calibration

|

|

||||||

|

|

||||||

An accurate calibration is crucial for successfully running the software. To get the best performance of the software, the stereo cameras and IMU should be hardware synchronized. Note that for the stereo calibration, which includes the camera intrinsics, distortion, and extrinsics between the two cameras, you have to use a calibration software. **Manually setting these parameters will not be accurate enough.** [Kalibr](https://github.com/ethz-asl/kalibr) can be used for the stereo calibration and also to get the transformation between the stereo cameras and IMU. The yaml file generated by Kalibr can be directly used in this software. See calibration files in the `config` folder for details. The two calibration files in the `config` folder should work directly with the EuRoC and [fast flight](https://github.com/KumarRobotics/msckf_vio/wiki) datasets. The convention of the calibration file is as follows:

|

|

||||||

|

|

||||||

`camx/T_cam_imu`: takes a vector from the IMU frame to the camx frame.

|

|

||||||

`cam1/T_cn_cnm1`: takes a vector from the cam0 frame to the cam1 frame.

|

|

||||||

|

|

||||||

The filter uses the first 200 IMU messages to initialize the gyro bias, acc bias, and initial orientation. Therefore, the robot is required to start from a stationary state in order to initialize the VIO successfully.

|

|

||||||

|

|

||||||

|

|

||||||

## EuRoC and UPenn Fast flight dataset example usage

|

## EuRoC and UPenn Fast flight dataset example usage

|

||||||

|

|

||||||

First obtain either the [EuRoC](https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets) or the [UPenn fast flight](https://github.com/KumarRobotics/msckf_vio/wiki/Dataset) dataset.

|

First obtain either the [EuRoC](https://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets) or the [UPenn fast flight](https://github.com/KumarRobotics/msckf_vio/wiki/Dataset) dataset.

|

||||||

@ -75,6 +75,8 @@ To visualize the pose and feature estimates you can use the provided rviz config

|

|||||||

|

|

||||||

## ROS Nodes

|

## ROS Nodes

|

||||||

|

|

||||||

|

The general structure is similar to the structure of the MSCKF implementation this repository is derived from.

|

||||||

|

|

||||||

### `image_processor` node

|

### `image_processor` node

|

||||||

|

|

||||||

**Subscribed Topics**

|

**Subscribed Topics**

|

||||||

|

|||||||

@ -9,7 +9,7 @@ cam0:

|

|||||||

0, 0, 0, 1.000000000000000]

|

0, 0, 0, 1.000000000000000]

|

||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [-0.28340811, 0.07395907, 0.00019359, 1.76187114e-05]

|

distortion_coeffs: [-0.28340811, 0.07395907, 0.00019359, 1.76187114e-05]

|

||||||

distortion_model: radtan

|

distortion_model: pre-radtan

|

||||||

intrinsics: [458.654, 457.296, 367.215, 248.375]

|

intrinsics: [458.654, 457.296, 367.215, 248.375]

|

||||||

resolution: [752, 480]

|

resolution: [752, 480]

|

||||||

timeshift_cam_imu: 0.0

|

timeshift_cam_imu: 0.0

|

||||||

@ -26,7 +26,7 @@ cam1:

|

|||||||

0, 0, 0, 1.000000000000000]

|

0, 0, 0, 1.000000000000000]

|

||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [-0.28368365, 0.07451284, -0.00010473, -3.55590700e-05]

|

distortion_coeffs: [-0.28368365, 0.07451284, -0.00010473, -3.55590700e-05]

|

||||||

distortion_model: radtan

|

distortion_model: pre-radtan

|

||||||

intrinsics: [457.587, 456.134, 379.999, 255.238]

|

intrinsics: [457.587, 456.134, 379.999, 255.238]

|

||||||

resolution: [752, 480]

|

resolution: [752, 480]

|

||||||

timeshift_cam_imu: 0.0

|

timeshift_cam_imu: 0.0

|

||||||

|

|||||||

@ -7,7 +7,7 @@ cam0:

|

|||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [0.0034823894022493434, 0.0007150348452162257, -0.0020532361418706202,

|

distortion_coeffs: [0.0034823894022493434, 0.0007150348452162257, -0.0020532361418706202,

|

||||||

0.00020293673591811182]

|

0.00020293673591811182]

|

||||||

distortion_model: equidistant

|

distortion_model: pre-equidistant

|

||||||

intrinsics: [190.97847715128717, 190.9733070521226, 254.93170605935475, 256.8974428996504]

|

intrinsics: [190.97847715128717, 190.9733070521226, 254.93170605935475, 256.8974428996504]

|

||||||

resolution: [512, 512]

|

resolution: [512, 512]

|

||||||

rostopic: /cam0/image_raw

|

rostopic: /cam0/image_raw

|

||||||

@ -25,7 +25,7 @@ cam1:

|

|||||||

camera_model: pinhole

|

camera_model: pinhole

|

||||||

distortion_coeffs: [0.0034003170790442797, 0.001766278153469831, -0.00266312569781606,

|

distortion_coeffs: [0.0034003170790442797, 0.001766278153469831, -0.00266312569781606,

|

||||||

0.0003299517423931039]

|

0.0003299517423931039]

|

||||||

distortion_model: equidistant

|

distortion_model: pre-equidistant

|

||||||

intrinsics: [190.44236969414825, 190.4344384721956, 252.59949716835982, 254.91723064636983]

|

intrinsics: [190.44236969414825, 190.4344384721956, 252.59949716835982, 254.91723064636983]

|

||||||

resolution: [512, 512]

|

resolution: [512, 512]

|

||||||

rostopic: /cam1/image_raw

|

rostopic: /cam1/image_raw

|

||||||

|

|||||||

@ -137,6 +137,7 @@ void Rhocost(const Eigen::Isometry3d& T_c0_ci,

|

|||||||

inline bool checkMotion(

|

inline bool checkMotion(

|

||||||

const CamStateServer& cam_states) const;

|

const CamStateServer& cam_states) const;

|

||||||

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief InitializeAnchor generates the NxN patch around the

|

* @brief InitializeAnchor generates the NxN patch around the

|

||||||

* feature in the Anchor image

|

* feature in the Anchor image

|

||||||

@ -171,7 +172,6 @@ void Rhocost(const Eigen::Isometry3d& T_c0_ci,

|

|||||||

Eigen::Vector3d& in_p) const;

|

Eigen::Vector3d& in_p) const;

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

* @brief project PositionToCamera Takes a 3d position in a world frame

|

* @brief project PositionToCamera Takes a 3d position in a world frame

|

||||||

* and projects it into the passed camera frame using pinhole projection

|

* and projects it into the passed camera frame using pinhole projection

|

||||||

@ -224,7 +224,7 @@ bool VisualizeKernel(

|

|||||||

bool VisualizePatch(

|

bool VisualizePatch(

|

||||||

const CAMState& cam_state,

|

const CAMState& cam_state,

|

||||||

const StateIDType& cam_state_id,

|

const StateIDType& cam_state_id,

|

||||||

CameraCalibration& cam0,

|

CameraCalibration& cam,

|

||||||

const Eigen::VectorXd& photo_r,

|

const Eigen::VectorXd& photo_r,

|

||||||

std::stringstream& ss) const;

|

std::stringstream& ss) const;

|

||||||

/*

|

/*

|

||||||

@ -713,26 +713,22 @@ bool Feature::VisualizeKernel(

|

|||||||

//cv::Sobel(anchorImage, yderImage, CV_8UC1, 0, 1, 3);

|

//cv::Sobel(anchorImage, yderImage, CV_8UC1, 0, 1, 3);

|

||||||

|

|

||||||

|

|

||||||

cv::Mat xderImage2(anchorImage.rows, anchorImage.cols, anchorImage_blurred.type());

|

// cv::Mat xderImage2(anchorImage.rows, anchorImage.cols, anchorImage_blurred.type());

|

||||||

cv::Mat yderImage2(anchorImage.rows, anchorImage.cols, anchorImage_blurred.type());

|

// cv::Mat yderImage2(anchorImage.rows, anchorImage.cols, anchorImage_blurred.type());

|

||||||

|

|

||||||

|

|

||||||

cv::Mat norm_abs_xderImage;

|

|

||||||

cv::normalize(abs_xderImage, norm_abs_xderImage, 0, 255, cv::NORM_MINMAX, CV_8UC1);

|

|

||||||

|

|

||||||

cv::imshow("xder", norm_abs_xderImage);

|

|

||||||

cv::imshow("yder", abs_yderImage);

|

|

||||||

|

|

||||||

|

/*

|

||||||

for(int i = 1; i < anchorImage.rows-1; i++)

|

for(int i = 1; i < anchorImage.rows-1; i++)

|

||||||

for(int j = 1; j < anchorImage.cols-1; j++)

|

for(int j = 1; j < anchorImage.cols-1; j++)

|

||||||

xderImage2.at<uint8_t>(j,i) = 255.*fabs(Kernel(cv::Point2f(i,j), anchorImage_blurred, "Sobel_x"));

|

xderImage2.at<uint8_t>(j,i) = 255.*fabs(Kernel(cv::Point2f(i,j), anchorImage_blurred, "Sobel_x"));

|

||||||

|

|

||||||

|

|

||||||

for(int i = 1; i < anchorImage.rows-1; i++)

|

for(int i = 1; i < anchorImage.rows-1; i++)

|

||||||

for(int j = 1; j < anchorImage.cols-1; j++)

|

for(int j = 1; j < anchorImage.cols-1; j++)

|

||||||

yderImage2.at<uint8_t>(j,i) = 255.*fabs(Kernel(cv::Point2f(i,j), anchorImage_blurred, "Sobel_y"));

|

yderImage2.at<uint8_t>(j,i) = 255.*fabs(Kernel(cv::Point2f(i,j), anchorImage_blurred, "Sobel_y"));

|

||||||

cv::imshow("anchor", anchorImage);

|

*/

|

||||||

cv::imshow("xder2", xderImage2);

|

//cv::imshow("anchor", anchorImage);

|

||||||

cv::imshow("yder2", yderImage2);

|

cv::imshow("xder2", xderImage);

|

||||||

|

cv::imshow("yder2", yderImage);

|

||||||

|

|

||||||

cvWaitKey(0);

|

cvWaitKey(0);

|

||||||

}

|

}

|

||||||

@ -740,7 +736,7 @@ bool Feature::VisualizeKernel(

|

|||||||

bool Feature::VisualizePatch(

|

bool Feature::VisualizePatch(

|

||||||

const CAMState& cam_state,

|

const CAMState& cam_state,

|

||||||

const StateIDType& cam_state_id,

|

const StateIDType& cam_state_id,

|

||||||

CameraCalibration& cam0,

|

CameraCalibration& cam,

|

||||||

const Eigen::VectorXd& photo_r,

|

const Eigen::VectorXd& photo_r,

|

||||||

std::stringstream& ss) const

|

std::stringstream& ss) const

|

||||||

{

|

{

|

||||||

@ -749,7 +745,7 @@ bool Feature::VisualizePatch(

|

|||||||

|

|

||||||

//visu - anchor

|

//visu - anchor

|

||||||

auto anchor = observations.begin();

|

auto anchor = observations.begin();

|

||||||

cv::Mat anchorImage = cam0.moving_window.find(anchor->first)->second.image;

|

cv::Mat anchorImage = cam.moving_window.find(anchor->first)->second.image;

|

||||||

cv::Mat dottedFrame(anchorImage.size(), CV_8UC3);

|

cv::Mat dottedFrame(anchorImage.size(), CV_8UC3);

|

||||||

cv::cvtColor(anchorImage, dottedFrame, CV_GRAY2RGB);

|

cv::cvtColor(anchorImage, dottedFrame, CV_GRAY2RGB);

|

||||||

|

|

||||||

@ -761,10 +757,10 @@ bool Feature::VisualizePatch(

|

|||||||

cv::Point ys(point.x, point.y);

|

cv::Point ys(point.x, point.y);

|

||||||

cv::rectangle(dottedFrame, xs, ys, cv::Scalar(0,255,255));

|

cv::rectangle(dottedFrame, xs, ys, cv::Scalar(0,255,255));

|

||||||

}

|

}

|

||||||

cam0.featureVisu = dottedFrame.clone();

|

cam.featureVisu = dottedFrame.clone();

|

||||||

|

|

||||||

// visu - feature

|

// visu - feature

|

||||||

cv::Mat current_image = cam0.moving_window.find(cam_state_id)->second.image;

|

cv::Mat current_image = cam.moving_window.find(cam_state_id)->second.image;

|

||||||

cv::cvtColor(current_image, dottedFrame, CV_GRAY2RGB);

|

cv::cvtColor(current_image, dottedFrame, CV_GRAY2RGB);

|

||||||

|

|

||||||

// set position in frame

|

// set position in frame

|

||||||

@ -772,7 +768,7 @@ bool Feature::VisualizePatch(

|

|||||||

std::vector<double> projectionPatch;

|

std::vector<double> projectionPatch;

|

||||||

for(auto point : anchorPatch_3d)

|

for(auto point : anchorPatch_3d)

|

||||||

{

|

{

|

||||||

cv::Point2f p_in_c0 = projectPositionToCamera(cam_state, cam_state_id, cam0, point);

|

cv::Point2f p_in_c0 = projectPositionToCamera(cam_state, cam_state_id, cam, point);

|

||||||

projectionPatch.push_back(PixelIrradiance(p_in_c0, current_image));

|

projectionPatch.push_back(PixelIrradiance(p_in_c0, current_image));

|

||||||

// visu - feature

|

// visu - feature

|

||||||

cv::Point xs(p_in_c0.x, p_in_c0.y);

|

cv::Point xs(p_in_c0.x, p_in_c0.y);

|

||||||

@ -780,7 +776,7 @@ bool Feature::VisualizePatch(

|

|||||||

cv::rectangle(dottedFrame, xs, ys, cv::Scalar(0,255,0));

|

cv::rectangle(dottedFrame, xs, ys, cv::Scalar(0,255,0));

|

||||||

}

|

}

|

||||||

|

|

||||||

cv::hconcat(cam0.featureVisu, dottedFrame, cam0.featureVisu);

|

cv::hconcat(cam.featureVisu, dottedFrame, cam.featureVisu);

|

||||||

|

|

||||||

|

|

||||||

// patches visualization

|

// patches visualization

|

||||||

@ -827,10 +823,14 @@ bool Feature::VisualizePatch(

|

|||||||

cv::putText(irradianceFrame, namer.str() , cvPoint(30, 65+scale*2*N),

|

cv::putText(irradianceFrame, namer.str() , cvPoint(30, 65+scale*2*N),

|

||||||

cv::FONT_HERSHEY_COMPLEX_SMALL, 0.8, cvScalar(0,0,0), 1, CV_AA);

|

cv::FONT_HERSHEY_COMPLEX_SMALL, 0.8, cvScalar(0,0,0), 1, CV_AA);

|

||||||

|

|

||||||

cv::Point2f p_f(observations.find(cam_state_id)->second(0),observations.find(cam_state_id)->second(1));

|

cv::Point2f p_f;

|

||||||

|

if(cam.id == 0)

|

||||||

|

p_f = cv::Point2f(observations.find(cam_state_id)->second(0),observations.find(cam_state_id)->second(1));

|

||||||

|

else if(cam.id == 1)

|

||||||

|

p_f = cv::Point2f(observations.find(cam_state_id)->second(2),observations.find(cam_state_id)->second(3));

|

||||||

// move to real pixels

|

// move to real pixels

|

||||||

p_f = image_handler::distortPoint(p_f, cam0.intrinsics, cam0.distortion_model, cam0.distortion_coeffs);

|

|

||||||

|

|

||||||

|

p_f = image_handler::distortPoint(p_f, cam.intrinsics, cam.distortion_model, cam.distortion_coeffs);

|

||||||

for(int i = 0; i<N; i++)

|

for(int i = 0; i<N; i++)

|

||||||

{

|

{

|

||||||

for(int j = 0; j<N ; j++)

|

for(int j = 0; j<N ; j++)

|

||||||

@ -853,17 +853,17 @@ bool Feature::VisualizePatch(

|

|||||||

|

|

||||||

for(int i = 0; i<N; i++)

|

for(int i = 0; i<N; i++)

|

||||||

for(int j = 0; j<N; j++)

|

for(int j = 0; j<N; j++)

|

||||||

if(photo_r(i*N+j)>0)

|

if(photo_r(2*(i*N+j))>0)

|

||||||

cv::rectangle(irradianceFrame,

|

cv::rectangle(irradianceFrame,

|

||||||

cv::Point(40+scale*(N+i+1), 15+scale*(N/2+j)),

|

cv::Point(40+scale*(N+i+1), 15+scale*(N/2+j)),

|

||||||

cv::Point(40+scale*(N+i), 15+scale*(N/2+j+1)),

|

cv::Point(40+scale*(N+i), 15+scale*(N/2+j+1)),

|

||||||

cv::Scalar(255 - photo_r(i*N+j)*255, 255 - photo_r(i*N+j)*255, 255),

|

cv::Scalar(255 - photo_r(2*(i*N+j)+1)*255, 255 - photo_r(2*(i*N+j)+1)*255, 255),

|

||||||

CV_FILLED);

|

CV_FILLED);

|

||||||

else

|

else

|

||||||

cv::rectangle(irradianceFrame,

|

cv::rectangle(irradianceFrame,

|

||||||

cv::Point(40+scale*(N+i+1), 15+scale*(N/2+j)),

|

cv::Point(40+scale*(N+i+1), 15+scale*(N/2+j)),

|

||||||

cv::Point(40+scale*(N+i), 15+scale*(N/2+j+1)),

|

cv::Point(40+scale*(N+i), 15+scale*(N/2+j+1)),

|

||||||

cv::Scalar(255, 255 + photo_r(i*N+j)*255, 255 + photo_r(i*N+j)*255),

|

cv::Scalar(255, 255 + photo_r(2*(i*N+j)+1)*255, 255 + photo_r(2*(i*N+j)+1)*255),

|

||||||

CV_FILLED);

|

CV_FILLED);

|

||||||

|

|

||||||

// gradient arrow

|

// gradient arrow

|

||||||

@ -884,7 +884,7 @@ bool Feature::VisualizePatch(

|

|||||||

3);

|

3);

|

||||||

*/

|

*/

|

||||||

|

|

||||||

cv::hconcat(cam0.featureVisu, irradianceFrame, cam0.featureVisu);

|

cv::hconcat(cam.featureVisu, irradianceFrame, cam.featureVisu);

|

||||||

|

|

||||||

/*

|

/*

|

||||||

// visualize position of used observations and resulting feature position

|

// visualize position of used observations and resulting feature position

|

||||||

@ -916,15 +916,15 @@ bool Feature::VisualizePatch(

|

|||||||

|

|

||||||

// draw, x y position and arrow with direction - write z next to it

|

// draw, x y position and arrow with direction - write z next to it

|

||||||

|

|

||||||

cv::resize(cam0.featureVisu, cam0.featureVisu, cv::Size(), rescale, rescale);

|

cv::resize(cam.featureVisu, cam.featureVisu, cv::Size(), rescale, rescale);

|

||||||

|

|

||||||

|

|

||||||

cv::hconcat(cam0.featureVisu, positionFrame, cam0.featureVisu);

|

cv::hconcat(cam.featureVisu, positionFrame, cam.featureVisu);

|

||||||

*/

|

*/

|

||||||

// write feature position

|

// write feature position

|

||||||

std::stringstream pos_s;

|

std::stringstream pos_s;

|

||||||

pos_s << "u: " << observations.begin()->second(0) << " v: " << observations.begin()->second(1);

|

pos_s << "u: " << observations.begin()->second(0) << " v: " << observations.begin()->second(1);

|

||||||

cv::putText(cam0.featureVisu, ss.str() , cvPoint(anchorImage.rows + 100, anchorImage.cols - 30),

|

cv::putText(cam.featureVisu, ss.str() , cvPoint(anchorImage.rows + 100, anchorImage.cols - 30),

|

||||||

cv::FONT_HERSHEY_COMPLEX_SMALL, 0.8, cvScalar(200,200,250), 1, CV_AA);

|

cv::FONT_HERSHEY_COMPLEX_SMALL, 0.8, cvScalar(200,200,250), 1, CV_AA);

|

||||||

// create line?

|

// create line?

|

||||||

|

|

||||||

@ -932,9 +932,9 @@ bool Feature::VisualizePatch(

|

|||||||

|

|

||||||

std::stringstream loc;

|

std::stringstream loc;

|

||||||

// loc << "/home/raphael/dev/MSCKF_ws/img/feature_" << std::to_string(ros::Time::now().toSec()) << ".jpg";

|

// loc << "/home/raphael/dev/MSCKF_ws/img/feature_" << std::to_string(ros::Time::now().toSec()) << ".jpg";

|

||||||

//cv::imwrite(loc.str(), cam0.featureVisu);

|

//cv::imwrite(loc.str(), cam.featureVisu);

|

||||||

|

|

||||||

cv::imshow("patch", cam0.featureVisu);

|

cv::imshow("patch", cam.featureVisu);

|

||||||

cvWaitKey(1);

|

cvWaitKey(1);

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -979,8 +979,6 @@ cv::Point2f Feature::pixelDistanceAt(

|

|||||||

return distance;

|

return distance;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

cv::Point2f Feature::projectPositionToCamera(

|

cv::Point2f Feature::projectPositionToCamera(

|

||||||

const CAMState& cam_state,

|

const CAMState& cam_state,

|

||||||

const StateIDType& cam_state_id,

|

const StateIDType& cam_state_id,

|

||||||

@ -997,7 +995,6 @@ cv::Point2f Feature::projectPositionToCamera(

|

|||||||

Eigen::Matrix3d R_w_c0 = quaternionToRotation(cam_state.orientation);

|

Eigen::Matrix3d R_w_c0 = quaternionToRotation(cam_state.orientation);

|

||||||

const Eigen::Vector3d& t_c0_w = cam_state.position;

|

const Eigen::Vector3d& t_c0_w = cam_state.position;

|

||||||

|

|

||||||

|

|

||||||

// project point according to model

|

// project point according to model

|

||||||

if(cam.id == 0)

|

if(cam.id == 0)

|

||||||

{

|

{

|

||||||

@ -1049,8 +1046,9 @@ bool Feature::initializeAnchor(const CameraCalibration& cam, int N)

|

|||||||

|

|

||||||

auto anchor = observations.begin();

|

auto anchor = observations.begin();

|

||||||

if(cam.moving_window.find(anchor->first) == cam.moving_window.end())

|

if(cam.moving_window.find(anchor->first) == cam.moving_window.end())

|

||||||

|

{

|

||||||

return false;

|

return false;

|

||||||

|

}

|

||||||

cv::Mat anchorImage = cam.moving_window.find(anchor->first)->second.image;

|

cv::Mat anchorImage = cam.moving_window.find(anchor->first)->second.image;

|

||||||

cv::Mat anchorImage_deeper;

|

cv::Mat anchorImage_deeper;

|

||||||

anchorImage.convertTo(anchorImage_deeper,CV_16S);

|

anchorImage.convertTo(anchorImage_deeper,CV_16S);

|

||||||

@ -1073,17 +1071,16 @@ bool Feature::initializeAnchor(const CameraCalibration& cam, int N)

|

|||||||

|

|

||||||

|

|

||||||

// check if image has been pre-undistorted

|

// check if image has been pre-undistorted

|

||||||

if(cam.distortion_model.substr(0,3) == "pre-")

|

if(cam.distortion_model.substr(0,3) == "pre")

|

||||||

{

|

{

|

||||||

std::cout << "is a pre" << std::endl;

|

|

||||||

//project onto pixel plane

|

//project onto pixel plane

|

||||||

undist_anchor_center_pos = cv::Point2f(u * cam.intrinsics[0] + cam.intrinsics[2], v * cam.intrinsics[1] + cam.intrinsics[3]);

|

undist_anchor_center_pos = cv::Point2f(u * cam.intrinsics[0] + cam.intrinsics[2], v * cam.intrinsics[1] + cam.intrinsics[3]);

|

||||||

|

|

||||||

// create vector of patch in pixel plane

|

// create vector of patch in pixel plane

|

||||||

for(double u_run = -n; u_run <= n; u_run++)

|

for(double u_run = -n; u_run <= n; u_run++)

|

||||||

for(double v_run = -n; v_run <= n; v_run++)

|

for(double v_run = -n; v_run <= n; v_run++)

|

||||||

anchorPatch_real.push_back(cv::Point2f(undist_anchor_center_pos.x+u_run, undist_anchor_center_pos.y+v_run));

|

anchorPatch_real.push_back(cv::Point2f(undist_anchor_center_pos.x+u_run, undist_anchor_center_pos.y+v_run));

|

||||||

|

|

||||||

|

|

||||||

//project back into u,v

|

//project back into u,v

|

||||||

for(int i = 0; i < N*N; i++)

|

for(int i = 0; i < N*N; i++)

|

||||||

anchorPatch_ideal.push_back(cv::Point2f((anchorPatch_real[i].x-cam.intrinsics[2])/cam.intrinsics[0], (anchorPatch_real[i].y-cam.intrinsics[3])/cam.intrinsics[1]));

|

anchorPatch_ideal.push_back(cv::Point2f((anchorPatch_real[i].x-cam.intrinsics[2])/cam.intrinsics[0], (anchorPatch_real[i].y-cam.intrinsics[3])/cam.intrinsics[1]));

|

||||||

|

|||||||

@ -320,6 +320,8 @@ private:

|

|||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

bool STREAMPAUSE;

|

||||||

|

|

||||||

// Indicate if this is the first image message.

|

// Indicate if this is the first image message.

|

||||||

bool is_first_img;

|

bool is_first_img;

|

||||||

|

|

||||||

|

|||||||

@ -202,7 +202,7 @@ class MsckfVio {

|

|||||||

Eigen::Vector4d& r);

|

Eigen::Vector4d& r);

|

||||||

// This function computes the Jacobian of all measurements viewed

|

// This function computes the Jacobian of all measurements viewed

|

||||||

// in the given camera states of this feature.

|

// in the given camera states of this feature.

|

||||||

void featureJacobian(

|

bool featureJacobian(

|

||||||

const FeatureIDType& feature_id,

|

const FeatureIDType& feature_id,

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

||||||

@ -245,7 +245,8 @@ class MsckfVio {

|

|||||||

Eigen::MatrixXd& H_y,

|

Eigen::MatrixXd& H_y,

|

||||||

Eigen::VectorXd& r);

|

Eigen::VectorXd& r);

|

||||||

|

|

||||||

void twodotFeatureJacobian(

|

|

||||||

|

bool twodotFeatureJacobian(

|

||||||

const FeatureIDType& feature_id,

|

const FeatureIDType& feature_id,

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

Eigen::MatrixXd& H_x, Eigen::VectorXd& r);

|

||||||

@ -282,10 +283,12 @@ class MsckfVio {

|

|||||||

bool nan_flag;

|

bool nan_flag;

|

||||||

bool play;

|

bool play;

|

||||||

double last_time_bound;

|

double last_time_bound;

|

||||||

|

double time_offset;

|

||||||

|

|

||||||

// Patch size for Photometry

|

// Patch size for Photometry

|

||||||

int N;

|

int N;

|

||||||

|

// Image rescale

|

||||||

|

int SCALE;

|

||||||

// Chi squared test table.

|

// Chi squared test table.

|

||||||

static std::map<int, double> chi_squared_test_table;

|

static std::map<int, double> chi_squared_test_table;

|

||||||

|

|

||||||

|

|||||||

@ -11,11 +11,14 @@

|

|||||||

output="screen"

|

output="screen"

|

||||||

>

|

>

|

||||||

|

|

||||||

|

<!-- Debugging Flaggs -->

|

||||||

|

<param name="StreamPause" value="true"/>

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

<param name="grid_row" value="4"/>

|

<param name="grid_row" value="4"/>

|

||||||

<param name="grid_col" value="4"/>

|

<param name="grid_col" value="4"/>

|

||||||

<param name="grid_min_feature_num" value="5"/>

|

<param name="grid_min_feature_num" value="3"/>

|

||||||

<param name="grid_max_feature_num" value="10"/>

|

<param name="grid_max_feature_num" value="5"/>

|

||||||

<param name="pyramid_levels" value="3"/>

|

<param name="pyramid_levels" value="3"/>

|

||||||

<param name="patch_size" value="15"/>

|

<param name="patch_size" value="15"/>

|

||||||

<param name="fast_threshold" value="10"/>

|

<param name="fast_threshold" value="10"/>

|

||||||

@ -28,6 +31,7 @@

|

|||||||

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

<remap from="~cam0_image" to="/cam0/image_raw"/>

|

||||||

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

<remap from="~cam1_image" to="/cam1/image_raw"/>

|

||||||

|

|

||||||

|

|

||||||

</node>

|

</node>

|

||||||

</group>

|

</group>

|

||||||

|

|

||||||

|

|||||||

@ -11,6 +11,9 @@

|

|||||||

output="screen"

|

output="screen"

|

||||||

>

|

>

|

||||||

|

|

||||||

|

<!-- Debugging Flaggs -->

|

||||||

|

<param name="StreamPause" value="true"/>

|

||||||

|

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

<param name="grid_row" value="4"/>

|

<param name="grid_row" value="4"/>

|

||||||

<param name="grid_col" value="4"/>

|

<param name="grid_col" value="4"/>

|

||||||

|

|||||||

@ -17,15 +17,17 @@

|

|||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

args='standalone msckf_vio/MsckfVioNodelet'

|

||||||

output="screen">

|

output="screen">

|

||||||

|

|

||||||

|

|

||||||

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

||||||

<param name="FILTER" value="0"/>

|

<param name="FILTER" value="1"/>

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

<!-- Debugging Flaggs -->

|

||||||

<param name="StreamPause" value="true"/>

|

<param name="StreamPause" value="true"/>

|

||||||

<param name="PrintImages" value="false"/>

|

<param name="PrintImages" value="true"/>

|

||||||

<param name="GroundTruth" value="false"/>

|

<param name="GroundTruth" value="false"/>

|

||||||

|

|

||||||

<param name="patch_size_n" value="3"/>

|

<param name="patch_size_n" value="5"/>

|

||||||

|

<param name="image_scale" value ="1"/>

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

<!-- Calibration parameters -->

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

|

|||||||

@ -17,15 +17,16 @@

|

|||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

args='standalone msckf_vio/MsckfVioNodelet'

|

||||||

output="screen">

|

output="screen">

|

||||||

|

|

||||||

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

<param name="FILTER" value="0"/>

|

||||||

<param name="FILTER" value="1"/>

|

|

||||||

|

|

||||||

<!-- Debugging Flaggs -->

|

<!-- Debugging Flaggs -->

|

||||||

<param name="StreamPause" value="true"/>

|

<param name="StreamPause" value="true"/>

|

||||||

<param name="PrintImages" value="false"/>

|

<param name="PrintImages" value="false"/>

|

||||||

<param name="GroundTruth" value="false"/>

|

<param name="GroundTruth" value="false"/>

|

||||||

|

|

||||||

<param name="patch_size_n" value="5"/>

|

<param name="patch_size_n" value="3"/>

|

||||||

|

<param name="image_scale" value ="1"/>

|

||||||

|

|

||||||

<!-- Calibration parameters -->

|

<!-- Calibration parameters -->

|

||||||

<rosparam command="load" file="$(arg calibration_file)"/>

|

<rosparam command="load" file="$(arg calibration_file)"/>

|

||||||

|

|

||||||

@ -33,7 +34,7 @@

|

|||||||

<param name="frame_rate" value="20"/>

|

<param name="frame_rate" value="20"/>

|

||||||

<param name="fixed_frame_id" value="$(arg fixed_frame_id)"/>

|

<param name="fixed_frame_id" value="$(arg fixed_frame_id)"/>

|

||||||

<param name="child_frame_id" value="odom"/>

|

<param name="child_frame_id" value="odom"/>

|

||||||

<param name="max_cam_state_size" value="20"/>

|

<param name="max_cam_state_size" value="12"/>

|

||||||

<param name="position_std_threshold" value="8.0"/>

|

<param name="position_std_threshold" value="8.0"/>

|

||||||

|

|

||||||

<param name="rotation_threshold" value="0.2618"/>

|

<param name="rotation_threshold" value="0.2618"/>

|

||||||

|

|||||||

@ -17,6 +17,7 @@

|

|||||||

args='standalone msckf_vio/MsckfVioNodelet'

|

args='standalone msckf_vio/MsckfVioNodelet'

|

||||||

output="screen">

|

output="screen">

|

||||||

|

|

||||||

|

|

||||||

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

<!-- Filter Flag, 0 = msckf, 1 = photometric, 2 = two -->

|

||||||

<param name="FILTER" value="1"/>

|

<param name="FILTER" value="1"/>

|

||||||

|

|

||||||

|

|||||||

64

src/bagcontrol.py

Normal file

64

src/bagcontrol.py

Normal file

@ -0,0 +1,64 @@

|

|||||||

|

#!/usr/bin/env python

|

||||||

|

import rosbag

|

||||||

|

import rospy

|

||||||

|

from sensor_msgs.msg import Imu, Image

|

||||||

|

from geometry_msgs.msg import TransformStamped

|

||||||

|

import time

|

||||||

|

import signal

|

||||||

|

import sys

|

||||||

|

|

||||||

|

|

||||||

|

def signal_handler(sig, frame):

|

||||||

|

print('gracefully exiting the program.')

|

||||||

|

bag.close()

|

||||||

|

sys.exit(0)

|

||||||

|

|

||||||

|

def main():

|

||||||

|

global bag

|

||||||

|

|

||||||

|

cam0_topic = '/cam0/image_raw'

|

||||||

|

cam1_topic = '/cam1/image_raw'

|

||||||

|

imu0_topic = '/imu0'

|

||||||

|

grnd_topic = '/vrpn_client/raw_transform'

|

||||||

|

|

||||||

|

rospy.init_node('controlbag')

|

||||||

|

rospy.set_param('play_bag', False)

|

||||||

|

|

||||||

|

cam0_pub = rospy.Publisher(cam0_topic, Image, queue_size=10)

|

||||||

|

cam1_pub = rospy.Publisher(cam1_topic, Image, queue_size=10)

|

||||||

|

imu0_pub = rospy.Publisher(imu0_topic, Imu, queue_size=10)

|

||||||

|

grnd_pub = rospy.Publisher(grnd_topic, TransformStamped, queue_size=10)

|

||||||

|

|

||||||

|

signal.signal(signal.SIGINT, signal_handler)

|

||||||

|

|

||||||

|

bag = rosbag.Bag('/home/raphael/dev/MSCKF_ws/bag/TUM/dataset-corridor1_1024_16.bag')

|

||||||

|

for topic, msg, t in bag.read_messages(topics=[cam0_topic, cam1_topic, imu0_topic, grnd_topic]):

|

||||||

|

|

||||||

|

# pause if parameter set to false

|

||||||

|

flag = False

|

||||||

|

while not rospy.get_param('/play_bag'):

|

||||||

|

time.sleep(0.01)

|

||||||

|

if not flag:

|

||||||

|

print("stopped playback")

|

||||||

|

flag = not flag

|

||||||

|

|

||||||

|

if flag:

|

||||||

|

print("resume playback")

|

||||||

|

|

||||||

|

if topic == cam0_topic:

|

||||||

|

cam0_pub.publish(msg)

|

||||||

|

|

||||||

|

elif topic == cam1_topic:

|

||||||

|

cam1_pub.publish(msg)

|

||||||

|

|

||||||

|

elif topic == imu0_topic:

|

||||||

|

imu0_pub.publish(msg)

|

||||||

|

|

||||||

|

elif topic ==grnd_topic:

|

||||||

|

grnd_pub.publish(msg)

|

||||||

|

|

||||||

|

#print msg

|

||||||

|

bag.close()

|

||||||

|

|

||||||

|

if __name__== "__main__":

|

||||||

|

main()

|

||||||

@ -40,10 +40,12 @@ void undistortImage(

|

|||||||

cv::fisheye::undistortImage(src, dst, K, distortion_coeffs, K);

|

cv::fisheye::undistortImage(src, dst, K, distortion_coeffs, K);

|

||||||

else if (distortion_model == "equidistant")

|

else if (distortion_model == "equidistant")

|

||||||

src.copyTo(dst);

|

src.copyTo(dst);

|

||||||

|

|

||||||

else if (distortion_model == "pre-radtan")

|

else if (distortion_model == "pre-radtan")

|

||||||

cv::undistort(src, dst, K, distortion_coeffs, K);

|

cv::undistort(src, dst, K, distortion_coeffs, K);

|

||||||

else if (distortion_model == "radtan")

|

else if (distortion_model == "radtan")

|

||||||

src.copyTo(dst);

|

src.copyTo(dst);

|

||||||

|

|

||||||

}

|

}

|

||||||

|

|

||||||

void undistortPoint(

|

void undistortPoint(

|

||||||

@ -95,6 +97,7 @@ void undistortPoint(

|

|||||||

pts_out.push_back(newPoint);

|

pts_out.push_back(newPoint);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

else if (distortion_model == "pre-equidistant" or distortion_model == "pre-radtan")

|

else if (distortion_model == "pre-equidistant" or distortion_model == "pre-radtan")

|

||||||

{

|

{

|

||||||

std::vector<cv::Point2f> temp_pts_out;

|

std::vector<cv::Point2f> temp_pts_out;

|

||||||

|

|||||||

@ -42,6 +42,9 @@ ImageProcessor::~ImageProcessor() {

|

|||||||

}

|

}

|

||||||

|

|

||||||

bool ImageProcessor::loadParameters() {

|

bool ImageProcessor::loadParameters() {

|

||||||

|

|

||||||

|

// debug parameters

|

||||||

|

nh.param<bool>("StreamPause", STREAMPAUSE, false);

|

||||||

// Camera calibration parameters

|

// Camera calibration parameters

|

||||||

nh.param<string>("cam0/distortion_model",

|

nh.param<string>("cam0/distortion_model",

|

||||||

cam0.distortion_model, string("radtan"));

|

cam0.distortion_model, string("radtan"));

|

||||||

@ -211,7 +214,9 @@ void ImageProcessor::stereoCallback(

|

|||||||

const sensor_msgs::ImageConstPtr& cam0_img,

|

const sensor_msgs::ImageConstPtr& cam0_img,

|

||||||

const sensor_msgs::ImageConstPtr& cam1_img) {

|

const sensor_msgs::ImageConstPtr& cam1_img) {

|

||||||

|

|

||||||

//cout << "==================================" << endl;

|

// stop playing bagfile if printing images

|

||||||

|

//if(STREAMPAUSE)

|

||||||

|

// nh.setParam("/play_bag_image", false);

|

||||||

|

|

||||||

// Get the current image.

|

// Get the current image.

|

||||||

cam0_curr_img_ptr = cv_bridge::toCvShare(cam0_img,

|

cam0_curr_img_ptr = cv_bridge::toCvShare(cam0_img,

|

||||||

@ -221,10 +226,19 @@ void ImageProcessor::stereoCallback(

|

|||||||

|

|

||||||

ros::Time start_time = ros::Time::now();

|

ros::Time start_time = ros::Time::now();

|

||||||

|

|

||||||

image_handler::undistortImage(cam0_curr_img_ptr->image, cam0_curr_img_ptr->image, cam0.distortion_model, cam0.intrinsics, cam0.distortion_coeffs);

|

|

||||||

image_handler::undistortImage(cam1_curr_img_ptr->image, cam1_curr_img_ptr->image, cam1.distortion_model, cam1.intrinsics, cam1.distortion_coeffs);

|

cv::Mat new_cam0;

|

||||||

|

cv::Mat new_cam1;

|

||||||

|

|

||||||

|

image_handler::undistortImage(cam0_curr_img_ptr->image, new_cam0, cam0.distortion_model, cam0.intrinsics, cam0.distortion_coeffs);

|

||||||

|

image_handler::undistortImage(cam1_curr_img_ptr->image, new_cam1, cam1.distortion_model, cam1.intrinsics, cam1.distortion_coeffs);

|

||||||

|

|

||||||

|

new_cam0.copyTo(cam0_curr_img_ptr->image);

|

||||||

|

new_cam1.copyTo(cam1_curr_img_ptr->image);

|

||||||

|

|

||||||

//ROS_INFO("Publishing: %f",

|

//ROS_INFO("Publishing: %f",

|

||||||

// (ros::Time::now()-start_time).toSec());

|

// (ros::Time::now()-start_time).toSec());

|

||||||

|

|

||||||

// Build the image pyramids once since they're used at multiple places

|

// Build the image pyramids once since they're used at multiple places

|

||||||

createImagePyramids();

|

createImagePyramids();

|

||||||

|

|

||||||

@ -251,6 +265,7 @@ void ImageProcessor::stereoCallback(

|

|||||||

// Add new features into the current image.

|

// Add new features into the current image.

|

||||||

start_time = ros::Time::now();

|

start_time = ros::Time::now();

|

||||||

addNewFeatures();

|

addNewFeatures();

|

||||||

|

|

||||||

//ROS_INFO("Addition time: %f",

|

//ROS_INFO("Addition time: %f",

|

||||||

// (ros::Time::now()-start_time).toSec());

|

// (ros::Time::now()-start_time).toSec());

|

||||||

|

|

||||||

@ -279,10 +294,12 @@ void ImageProcessor::stereoCallback(

|

|||||||

// (ros::Time::now()-start_time).toSec());

|

// (ros::Time::now()-start_time).toSec());

|

||||||

|

|

||||||

// Update the previous image and previous features.

|

// Update the previous image and previous features.

|

||||||

|

|

||||||

cam0_prev_img_ptr = cam0_curr_img_ptr;

|

cam0_prev_img_ptr = cam0_curr_img_ptr;

|

||||||

prev_features_ptr = curr_features_ptr;

|

prev_features_ptr = curr_features_ptr;

|

||||||

std::swap(prev_cam0_pyramid_, curr_cam0_pyramid_);

|

std::swap(prev_cam0_pyramid_, curr_cam0_pyramid_);

|

||||||

|

|

||||||

|

|

||||||

// Initialize the current features to empty vectors.

|

// Initialize the current features to empty vectors.

|

||||||

curr_features_ptr.reset(new GridFeatures());

|

curr_features_ptr.reset(new GridFeatures());

|

||||||

for (int code = 0; code <

|

for (int code = 0; code <

|

||||||

@ -290,6 +307,10 @@ void ImageProcessor::stereoCallback(

|

|||||||

(*curr_features_ptr)[code] = vector<FeatureMetaData>(0);

|

(*curr_features_ptr)[code] = vector<FeatureMetaData>(0);

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// stop playing bagfile if printing images

|

||||||

|

//if(STREAMPAUSE)

|

||||||

|

// nh.setParam("/play_bag_image", true);

|

||||||

|

|

||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -586,6 +607,7 @@ void ImageProcessor::trackFeatures() {

|

|||||||

++after_ransac;

|

++after_ransac;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

||||||

// Compute the tracking rate.

|

// Compute the tracking rate.

|

||||||

int prev_feature_num = 0;

|

int prev_feature_num = 0;

|

||||||

for (const auto& item : *prev_features_ptr)

|

for (const auto& item : *prev_features_ptr)

|

||||||

@ -625,9 +647,14 @@ void ImageProcessor::stereoMatch(

|

|||||||

image_handler::undistortPoints(cam0_points, cam0.intrinsics, cam0.distortion_model,

|

image_handler::undistortPoints(cam0_points, cam0.intrinsics, cam0.distortion_model,

|

||||||

cam0.distortion_coeffs, cam0_points_undistorted,

|

cam0.distortion_coeffs, cam0_points_undistorted,

|

||||||

R_cam0_cam1);

|

R_cam0_cam1);

|

||||||

|

<<<<<<< HEAD

|

||||||

|

cam1_points = distortPoints(cam0_points_undistorted, cam1_intrinsics,

|

||||||

|

cam1_distortion_model, cam1_distortion_coeffs);

|

||||||

|

=======

|

||||||

|

|

||||||

cam1_points = image_handler::distortPoints(cam0_points_undistorted, cam1.intrinsics,

|

cam1_points = image_handler::distortPoints(cam0_points_undistorted, cam1.intrinsics,

|

||||||

cam1.distortion_model, cam1.distortion_coeffs);

|

cam1.distortion_model, cam1.distortion_coeffs);

|

||||||

|

>>>>>>> photometry-jakobi

|

||||||

}

|

}

|

||||||

|

|

||||||

// Track features using LK optical flow method.

|

// Track features using LK optical flow method.

|

||||||

@ -665,6 +692,8 @@ void ImageProcessor::stereoMatch(

|

|||||||

|

|

||||||

// Further remove outliers based on the known

|

// Further remove outliers based on the known

|

||||||

// essential matrix.

|

// essential matrix.

|

||||||

|

|

||||||

|

|

||||||

vector<cv::Point2f> cam0_points_undistorted(0);

|

vector<cv::Point2f> cam0_points_undistorted(0);

|

||||||

vector<cv::Point2f> cam1_points_undistorted(0);

|

vector<cv::Point2f> cam1_points_undistorted(0);

|

||||||

image_handler::undistortPoints(

|

image_handler::undistortPoints(

|

||||||

@ -674,6 +703,7 @@ void ImageProcessor::stereoMatch(

|

|||||||

cam1_points, cam1.intrinsics, cam1.distortion_model,

|

cam1_points, cam1.intrinsics, cam1.distortion_model,

|

||||||

cam1.distortion_coeffs, cam1_points_undistorted);

|

cam1.distortion_coeffs, cam1_points_undistorted);

|

||||||

|

|

||||||

|

|

||||||

double norm_pixel_unit = 4.0 / (

|

double norm_pixel_unit = 4.0 / (

|

||||||

cam0.intrinsics[0]+cam0.intrinsics[1]+

|

cam0.intrinsics[0]+cam0.intrinsics[1]+

|

||||||

cam1.intrinsics[0]+cam1.intrinsics[1]);

|

cam1.intrinsics[0]+cam1.intrinsics[1]);

|

||||||

|

|||||||

@ -50,7 +50,7 @@ Isometry3d CAMState::T_cam0_cam1 = Isometry3d::Identity();

|

|||||||

|

|

||||||

// Static member variables in Feature class.

|

// Static member variables in Feature class.

|

||||||

FeatureIDType Feature::next_id = 0;

|

FeatureIDType Feature::next_id = 0;

|

||||||

double Feature::observation_noise = 0.05;

|

double Feature::observation_noise = 0.01;

|

||||||

Feature::OptimizationConfig Feature::optimization_config;

|

Feature::OptimizationConfig Feature::optimization_config;

|

||||||

|

|

||||||

map<int, double> MsckfVio::chi_squared_test_table;

|

map<int, double> MsckfVio::chi_squared_test_table;

|

||||||

@ -137,6 +137,8 @@ bool MsckfVio::loadParameters() {

|

|||||||

// Get the photometric patch size

|

// Get the photometric patch size

|

||||||

nh.param<int>("patch_size_n",

|

nh.param<int>("patch_size_n",

|

||||||

N, 3);

|

N, 3);

|

||||||

|

// Get rescaling factor for image

|

||||||

|

nh.param<int>("image_scale", SCALE, 1);

|

||||||

|

|

||||||

// get camera information (used for back projection)

|

// get camera information (used for back projection)

|

||||||

nh.param<string>("cam0/distortion_model",

|

nh.param<string>("cam0/distortion_model",

|

||||||

@ -407,6 +409,7 @@ void MsckfVio::imageCallback(

|

|||||||

// stop playing bagfile if printing images

|

// stop playing bagfile if printing images

|

||||||

if(STREAMPAUSE)

|

if(STREAMPAUSE)

|

||||||

nh.setParam("/play_bag", false);

|

nh.setParam("/play_bag", false);

|

||||||

|

|

||||||

// Return if the gravity vector has not been set.

|

// Return if the gravity vector has not been set.

|

||||||

if (!is_gravity_set)

|

if (!is_gravity_set)

|

||||||

{

|

{

|

||||||

@ -424,6 +427,8 @@ void MsckfVio::imageCallback(

|

|||||||

// the origin.

|

// the origin.

|

||||||

if (is_first_img) {

|

if (is_first_img) {

|

||||||

is_first_img = false;

|

is_first_img = false;

|

||||||

|

|

||||||

|

time_offset = feature_msg->header.stamp.toSec();

|

||||||

state_server.imu_state.time = feature_msg->header.stamp.toSec();

|

state_server.imu_state.time = feature_msg->header.stamp.toSec();

|

||||||

}

|

}

|

||||||

static double max_processing_time = 0.0;

|

static double max_processing_time = 0.0;

|

||||||

@ -467,6 +472,7 @@ void MsckfVio::imageCallback(

|

|||||||

|

|

||||||

|

|

||||||

// cout << "4" << endl;

|

// cout << "4" << endl;

|

||||||

|

|

||||||

// Perform measurement update if necessary.

|

// Perform measurement update if necessary.

|

||||||

start_time = ros::Time::now();

|

start_time = ros::Time::now();

|

||||||

removeLostFeatures();

|

removeLostFeatures();

|

||||||

@ -475,7 +481,7 @@ void MsckfVio::imageCallback(

|

|||||||

|

|

||||||

// cout << "5" << endl;

|

// cout << "5" << endl;

|

||||||

start_time = ros::Time::now();

|

start_time = ros::Time::now();

|

||||||

pruneLastCamStateBuffer();

|

pruneCamStateBuffer();

|

||||||

double prune_cam_states_time = (

|

double prune_cam_states_time = (

|

||||||

ros::Time::now()-start_time).toSec();

|

ros::Time::now()-start_time).toSec();

|

||||||

|

|

||||||

@ -542,13 +548,15 @@ void MsckfVio::manageMovingWindow(

|

|||||||

cv_bridge::CvImageConstPtr cam1_img_ptr = cv_bridge::toCvShare(cam1_img,

|

cv_bridge::CvImageConstPtr cam1_img_ptr = cv_bridge::toCvShare(cam1_img,

|

||||||

sensor_msgs::image_encodings::MONO8);

|

sensor_msgs::image_encodings::MONO8);

|

||||||

|

|

||||||

image_handler::undistortImage(cam0_img_ptr->image, cam0_img_ptr->image, cam0.distortion_model, cam0.intrinsics, cam0.distortion_coeffs);

|

cv::Mat new_cam0;

|

||||||

image_handler::undistortImage(cam1_img_ptr->image, cam1_img_ptr->image, cam1.distortion_model, cam1.intrinsics, cam1.distortion_coeffs);

|

cv::Mat new_cam1;

|

||||||

|

|

||||||

|

image_handler::undistortImage(cam0_img_ptr->image, new_cam0, cam0.distortion_model, cam0.intrinsics, cam0.distortion_coeffs);

|

||||||

|

image_handler::undistortImage(cam1_img_ptr->image, new_cam1, cam1.distortion_model, cam1.intrinsics, cam1.distortion_coeffs);

|

||||||

|

|

||||||

// save image information into moving window

|

// save image information into moving window

|

||||||

cam0.moving_window[state_server.imu_state.id].image = cam0_img_ptr->image.clone();

|

cam0.moving_window[state_server.imu_state.id].image = new_cam0.clone();

|

||||||

cam1.moving_window[state_server.imu_state.id].image = cam1_img_ptr->image.clone();

|

cam1.moving_window[state_server.imu_state.id].image = new_cam1.clone();

|

||||||

|

|

||||||

cv::Mat xder;

|

cv::Mat xder;

|

||||||

cv::Mat yder;

|

cv::Mat yder;

|

||||||

@ -573,20 +581,19 @@ void MsckfVio::manageMovingWindow(

|

|||||||

yder/=96.;

|

yder/=96.;

|

||||||

|

|

||||||

|

|

||||||

/*

|

/*

|

||||||

cv::Mat norm_abs_xderImage;

|

cv::Mat norm_abs_xderImage;

|

||||||

cv::convertScaleAbs(xder, norm_abs_xderImage);

|

cv::convertScaleAbs(xder, norm_abs_xderImage);

|

||||||

cv::normalize(norm_abs_xderImage, norm_abs_xderImage, 0, 255, cv::NORM_MINMAX, CV_8UC1);

|

cv::normalize(norm_abs_xderImage, norm_abs_xderImage, 0, 255, cv::NORM_MINMAX, CV_8UC1);

|

||||||

|

|

||||||

cv::imshow("xder", norm_abs_xderImage);

|

cv::imshow("xder", norm_abs_xderImage);

|

||||||

cvWaitKey(0);

|

cvWaitKey(0);

|

||||||

*/

|

*/

|

||||||

|

|

||||||

// save into moving window

|

// save into moving window

|

||||||

cam1.moving_window[state_server.imu_state.id].dximage = xder.clone();

|

cam1.moving_window[state_server.imu_state.id].dximage = xder.clone();

|

||||||

cam1.moving_window[state_server.imu_state.id].dyimage = yder.clone();

|

cam1.moving_window[state_server.imu_state.id].dyimage = yder.clone();

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

//TODO handle any massive overflow correctly (should be pruned, before this ever triggers)

|

//TODO handle any massive overflow correctly (should be pruned, before this ever triggers)

|

||||||

while(cam0.moving_window.size() > 100)

|

while(cam0.moving_window.size() > 100)

|

||||||

{

|

{

|

||||||

@ -786,7 +793,7 @@ void MsckfVio::mocapOdomCallback(const nav_msgs::OdometryConstPtr& msg) {

|

|||||||

void MsckfVio::calcErrorState()

|

void MsckfVio::calcErrorState()

|

||||||

{

|

{

|

||||||

|

|

||||||

// imu error

|

// imu "error"

|

||||||

err_state_server.imu_state.id = state_server.imu_state.id;

|

err_state_server.imu_state.id = state_server.imu_state.id;

|

||||||

err_state_server.imu_state.time = state_server.imu_state.time;

|

err_state_server.imu_state.time = state_server.imu_state.time;

|

||||||

|

|

||||||

@ -832,9 +839,9 @@ void MsckfVio::batchTruthProcessing(const double& time_bound) {

|

|||||||

|

|

||||||

// Counter how many IMU msgs in the buffer are used.

|

// Counter how many IMU msgs in the buffer are used.

|

||||||

int used_truth_msg_cntr = 0;

|

int used_truth_msg_cntr = 0;

|

||||||

|

double truth_time;

|

||||||

for (const auto& truth_msg : truth_msg_buffer) {

|

for (const auto& truth_msg : truth_msg_buffer) {

|

||||||

double truth_time = truth_msg.header.stamp.toSec();

|

truth_time = truth_msg.header.stamp.toSec();

|

||||||

if (truth_time < true_state_server.imu_state.time) {

|

if (truth_time < true_state_server.imu_state.time) {

|

||||||

++used_truth_msg_cntr;

|

++used_truth_msg_cntr;

|

||||||

continue;

|

continue;

|

||||||

@ -854,6 +861,7 @@ void MsckfVio::batchTruthProcessing(const double& time_bound) {

|

|||||||

// Execute process model.

|

// Execute process model.

|

||||||

processTruthtoIMU(truth_time, m_rotation, m_translation);

|

processTruthtoIMU(truth_time, m_rotation, m_translation);

|

||||||

++used_truth_msg_cntr;

|

++used_truth_msg_cntr;

|

||||||

|

|

||||||

}

|

}

|

||||||

last_time_bound = time_bound;

|

last_time_bound = time_bound;

|

||||||

|

|

||||||

@ -864,6 +872,31 @@ void MsckfVio::batchTruthProcessing(const double& time_bound) {

|

|||||||

truth_msg_buffer.erase(truth_msg_buffer.begin(),

|

truth_msg_buffer.erase(truth_msg_buffer.begin(),

|

||||||

truth_msg_buffer.begin()+used_truth_msg_cntr);

|

truth_msg_buffer.begin()+used_truth_msg_cntr);

|

||||||

|

|

||||||

|

std::ofstream logfile;

|

||||||

|

|

||||||

|

int FileHandler;

|

||||||

|

char FileBuffer[1024];

|

||||||

|

float load = 0;

|

||||||

|

|

||||||

|

FileHandler = open("/proc/loadavg", O_RDONLY);

|

||||||

|

if( FileHandler >= 0) {

|

||||||

|

auto file = read(FileHandler, FileBuffer, sizeof(FileBuffer) - 1);

|

||||||

|

sscanf(FileBuffer, "%f", &load);

|

||||||

|

close(FileHandler);

|

||||||

|

}

|

||||||

|

|

||||||

|

auto gt = true_state_server.imu_state.position;

|

||||||

|

auto gr = true_state_server.imu_state.orientation;

|

||||||

|

logfile.open("/home/raphael/dev/MSCKF_ws/bag/log.txt", std::ios_base::app);

|

||||||

|

//ros time, cpu load , ground truth, estimation, ros time

|

||||||

|

logfile << true_state_server.imu_state.time - time_offset << "; " << load << "; ";

|

||||||

|

logfile << gt[0] << "; " << gt[1] << "; " << gt[2] << "; " << gr[0] << "; " << gr[1] << "; " << gr[2] << "; " << gr[3] << ";";

|

||||||

|

|

||||||

|

// estimation

|

||||||

|

auto et = state_server.imu_state.position;

|

||||||

|

auto er = state_server.imu_state.orientation;

|

||||||

|

logfile << et[0] << "; " << et[1] << "; " << et[2] << "; " << er[0] << "; " << er[1] << "; " << er[2] << "; " << er[3] << "; \n" << endl;;

|

||||||

|

logfile.close();

|

||||||

/*

|

/*

|

||||||

// calculate change

|

// calculate change

|

||||||

delta_position = state_server.imu_state.position - old_imu_state.position;

|

delta_position = state_server.imu_state.position - old_imu_state.position;

|

||||||

@ -1459,11 +1492,13 @@ void MsckfVio::twodotMeasurementJacobian(

|

|||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

void MsckfVio::twodotFeatureJacobian(

|

bool MsckfVio::twodotFeatureJacobian(

|

||||||

const FeatureIDType& feature_id,

|

const FeatureIDType& feature_id,

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

MatrixXd& H_x, VectorXd& r)

|

MatrixXd& H_x, VectorXd& r)

|

||||||

{

|

{

|

||||||

|

if(FILTER != 2)

|

||||||

|

return false;

|

||||||

|

|

||||||

const auto& feature = map_server[feature_id];

|

const auto& feature = map_server[feature_id];

|

||||||

|

|

||||||

@ -1485,7 +1520,9 @@ void MsckfVio::twodotFeatureJacobian(

|

|||||||

|

|

||||||

MatrixXd H_xi = MatrixXd::Zero(jacobian_row_size,

|

MatrixXd H_xi = MatrixXd::Zero(jacobian_row_size,

|

||||||

21+state_server.cam_states.size()*7);

|

21+state_server.cam_states.size()*7);

|

||||||

|

|

||||||

MatrixXd H_yi = MatrixXd::Zero(jacobian_row_size, 1);

|

MatrixXd H_yi = MatrixXd::Zero(jacobian_row_size, 1);

|

||||||

|

|

||||||

VectorXd r_i = VectorXd::Zero(jacobian_row_size);

|

VectorXd r_i = VectorXd::Zero(jacobian_row_size);

|

||||||

int stack_cntr = 0;

|

int stack_cntr = 0;

|

||||||

|

|

||||||

@ -1493,6 +1530,7 @@ void MsckfVio::twodotFeatureJacobian(

|

|||||||

|

|

||||||

MatrixXd H_xl;

|

MatrixXd H_xl;

|

||||||

MatrixXd H_yl;

|

MatrixXd H_yl;

|

||||||

|

|

||||||

Eigen::VectorXd r_l = VectorXd::Zero(4);

|

Eigen::VectorXd r_l = VectorXd::Zero(4);

|

||||||

|

|

||||||

twodotMeasurementJacobian(cam_id, feature.id, H_xl, H_yl, r_l);

|

twodotMeasurementJacobian(cam_id, feature.id, H_xl, H_yl, r_l);

|

||||||

@ -1503,10 +1541,14 @@ void MsckfVio::twodotFeatureJacobian(

|

|||||||

// Stack the Jacobians.

|

// Stack the Jacobians.

|

||||||

H_xi.block(stack_cntr, 0, H_xl.rows(), H_xl.cols()) = H_xl;

|

H_xi.block(stack_cntr, 0, H_xl.rows(), H_xl.cols()) = H_xl;

|

||||||

H_yi.block(stack_cntr, 0, H_yl.rows(), H_yl.cols()) = H_yl;

|

H_yi.block(stack_cntr, 0, H_yl.rows(), H_yl.cols()) = H_yl;

|

||||||

|

|

||||||

r_i.segment(stack_cntr, 4) = r_l;

|

r_i.segment(stack_cntr, 4) = r_l;

|

||||||

stack_cntr += 4;

|

stack_cntr += 4;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

||||||

|

H_xi.conservativeResize(stack_cntr, H_xi.cols());

|

||||||

|

H_yi.conservativeResize(stack_cntr, H_yi.cols());

|

||||||

// Project the residual and Jacobians onto the nullspace

|

// Project the residual and Jacobians onto the nullspace

|

||||||

// of H_yj.

|

// of H_yj.

|

||||||

|

|

||||||

@ -1554,7 +1596,7 @@ void MsckfVio::twodotFeatureJacobian(

|

|||||||

std::cout << "resume playback" << std::endl;

|

std::cout << "resume playback" << std::endl;

|

||||||

nh.setParam("/play_bag", true);

|

nh.setParam("/play_bag", true);

|

||||||

}

|

}

|

||||||

return;

|

return true;

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

||||||

@ -1697,8 +1739,6 @@ bool MsckfVio::PhotometricPatchPointJacobian(

|

|||||||

dI_dhj(1, 2) = dx_c1 * cam1.intrinsics[0];

|

dI_dhj(1, 2) = dx_c1 * cam1.intrinsics[0];

|

||||||

dI_dhj(1, 3) = dy_c1 * cam1.intrinsics[1];

|

dI_dhj(1, 3) = dy_c1 * cam1.intrinsics[1];

|

||||||

|

|

||||||

//cout << dI_dhj(0, 0) << ", " << dI_dhj(0, 1) << endl;

|

|

||||||

|

|

||||||

// add jacobian

|

// add jacobian

|

||||||

|

|

||||||

//dh / d{}^Cp_{ij}

|

//dh / d{}^Cp_{ij}

|

||||||

@ -1828,7 +1868,7 @@ bool MsckfVio::PhotometricMeasurementJacobian(

|

|||||||

|

|

||||||

// visualizing functions

|

// visualizing functions

|

||||||

feature.MarkerGeneration(marker_pub, state_server.cam_states);

|

feature.MarkerGeneration(marker_pub, state_server.cam_states);

|

||||||

feature.VisualizePatch(cam_state, cam_state_id, cam0, r_photo, ss);

|

feature.VisualizePatch(cam_state, cam_state_id, cam1, r_photo, ss);

|

||||||

//feature.VisualizeKernel(cam_state, cam_state_id, cam0);

|

//feature.VisualizeKernel(cam_state, cam_state_id, cam0);

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -1884,6 +1924,8 @@ bool MsckfVio::PhotometricFeatureJacobian(

|

|||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

MatrixXd& H_x, VectorXd& r)

|

MatrixXd& H_x, VectorXd& r)

|

||||||

{

|

{

|

||||||

|

if(FILTER != 1)

|

||||||

|

return false;

|

||||||

|

|

||||||

const auto& feature = map_server[feature_id];

|

const auto& feature = map_server[feature_id];

|

||||||

|

|

||||||

@ -1918,7 +1960,9 @@ bool MsckfVio::PhotometricFeatureJacobian(

|

|||||||

|

|

||||||

// skip observation if measurement is not valid

|

// skip observation if measurement is not valid

|

||||||

if(not PhotometricMeasurementJacobian(cam_id, feature.id, H_xl, H_yl, r_l))

|

if(not PhotometricMeasurementJacobian(cam_id, feature.id, H_xl, H_yl, r_l))

|

||||||

|

{

|

||||||

continue;

|

continue;

|

||||||

|

}

|

||||||

|

|

||||||

// set size of stacking jacobians, once the returned jacobians are known

|

// set size of stacking jacobians, once the returned jacobians are known

|

||||||

if(first)

|

if(first)

|

||||||

@ -2094,12 +2138,15 @@ void MsckfVio::measurementJacobian(

|

|||||||

return;

|

return;

|

||||||

}

|

}

|

||||||

|

|

||||||

void MsckfVio::featureJacobian(

|

bool MsckfVio::featureJacobian(

|

||||||

const FeatureIDType& feature_id,

|

const FeatureIDType& feature_id,

|

||||||

const std::vector<StateIDType>& cam_state_ids,

|

const std::vector<StateIDType>& cam_state_ids,

|

||||||

MatrixXd& H_x, VectorXd& r)

|

MatrixXd& H_x, VectorXd& r)

|

||||||

{

|

{

|

||||||

// stop playing bagfile if printing images

|

|

||||||

|

|

||||||

|

if(FILTER != 0)

|

||||||

|

return false;

|

||||||

|

|

||||||

const auto& feature = map_server[feature_id];

|

const auto& feature = map_server[feature_id];

|

||||||

|

|